The Application Security domain has evolved significantly over the last decade. It’s no surprise, then, that this evolution has produced a jungle of tools that causes confusion, noise, and overlapping messages. A few years ago, as DevSecOps and shift-left concepts started to gain momentum, many popular SCA tools came to market, including Snyk and Mend (formerly WhiteSource), and GitHub added native SCA functionality through Dependabot. This was an important step for the security industry, but it also introduced new levels of alert fatigue that started to overwhelm developers.

SCA and CSPM tools would alert on tens of thousands of vulnerabilities in every scan, and that count would grow on the scale of a thousand CVEs with every new library introduced.

Traditional application security approaches have left us with a flood of alerts that are prioritized only by CVSS score. Used on its own, the CVSS score is a vanity metric for security posture, because it does not take critical factors into account, such as whether the vulnerable code ever runs in your environment. Enter Oligo, and in particular the concept of runtime application security, which is gaining traction in the industry. Oligo Security set out to address this known pain through deep runtime application reachability and visibility.

To date, there are two popular methods for implementing runtime application security. Despite similar messaging, they deliver very different results. In this post, we’ll walk through these methods, the level of reachability and visibility they truly provide, and what you need to know to keep your systems secure and reduce alert fatigue for your engineers.

Runtime Application Security - Executed vs. Loaded Libraries

Many tools today understand the need for runtime application security, given the growing number of exploits and the devastating impact breaches have on companies. Companies need true coverage rather than just ticking a regulatory compliance box. This, however, is always easier said than done. That is why many of them are turning to runtime application security for a real-time view into their systems as the software executes, to ensure they are protected from known, and possibly even unknown, exploits.

The benefits of runtime application security are many: real-time threat detection, prevention of zero-day attacks, and better control over your systems and stacks as a whole. Yet what you actually get when you trust your tooling to deliver runtime application security varies widely from tool to tool.

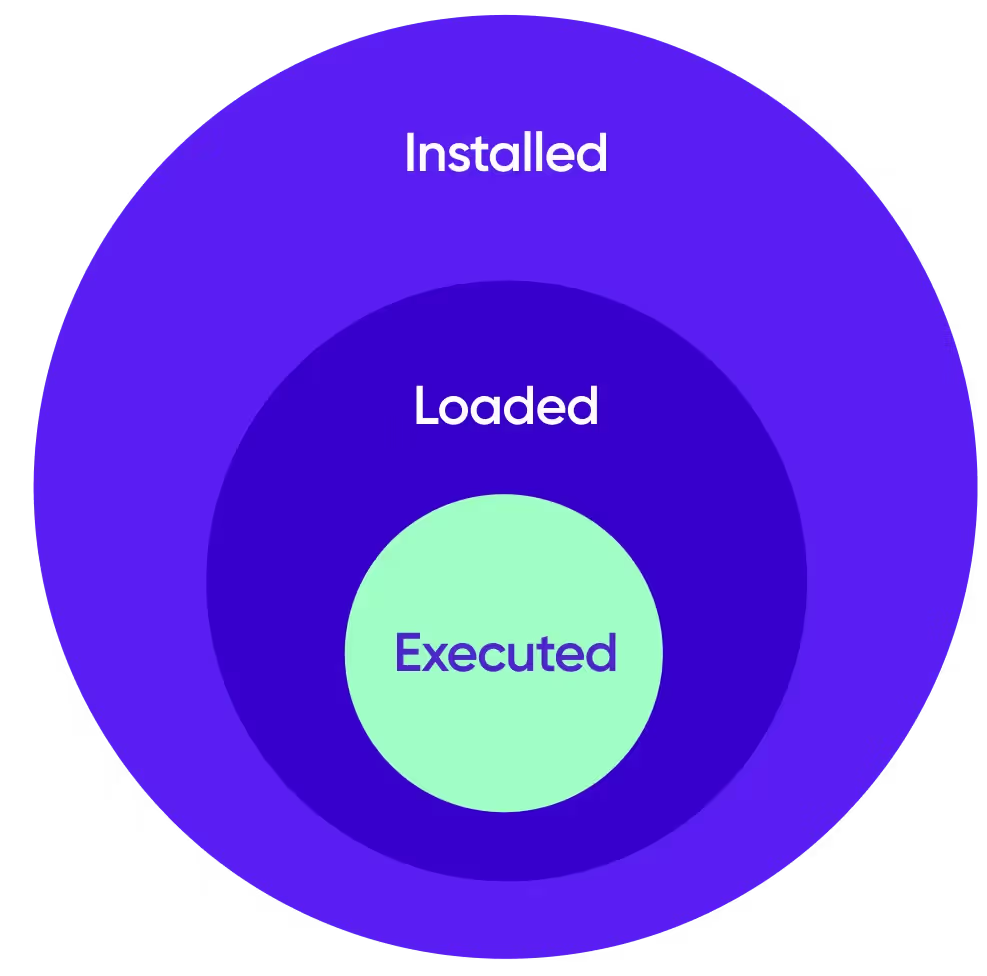

As in many parts of engineering, not all of the code developers write is loaded, let alone executed, at runtime. This difference is quite significant when it comes to outcomes and true positive results.

Knowing whether a library or file is actually executed at runtime can make a huge difference in the number of vulnerabilities your teams truly need to address, letting them focus on real potential risk to systems. To tell the difference, several methods of runtime application security are employed today that try to assess the reachability of vulnerable libraries in systems.

The Versatility of TensorFlow: A Dive into its Ecosystem and Dependencies

As an example, let's take a look at TensorFlow. TensorFlow, an end-to-end open-source platform for machine learning, is designed to provide a wide range of capabilities for AI practitioners.

It provides a complete ecosystem, with a bunch of features:

- Training AI Models - data loading, optimizers, loss functions, training-related code

- Experiment tracking with TensorBoard

- Drivers and Libraries for HW acceleration (CUDA, XNNPack)

- Saving and Loading checkpoints (Load the weights of the model from a file to the memory of the inference hardware)

- Model Inference (Feed the model with an input and predict/generate the output)

- Model Runtime Optimization - Quantization of the model’s weights, fusing layers, etc.

As of today, TensorFlow depends on several Python packages to train models (which in turn depend on even more packages). In practice, however, only some of these dependencies are used in production. To run inference on a model, a different subset is used.

The graph of the dependencies can be seen here:

<script src="https://gist.github.com/avioligo/95f7ddb3de2136a880fd6ffd180a02d5.js"></script>

[TensorFlow dependency graph (generated using pipdeptree)]

Is it possible to automatically distinguish between dependencies that our applications invoke, the dependencies that are only loaded to memory (imported), and the dependencies that are only installed but are not in use by our application?
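Part of this question can be answered from inside a Python process. The following is a minimal sketch, not Oligo's method: the helper name `classify_module` is hypothetical, and it only separates "loaded" from "installed but not loaded". It cannot tell whether a loaded module's functions were ever invoked.

```python
import importlib.util
import sys


def classify_module(name):
    """Classify a top-level module as 'loaded', 'installed', or 'missing'.

    'loaded'    -> already imported into this process (present in sys.modules)
    'installed' -> importable in this environment, but not imported so far
    'missing'   -> not available in this environment at all
    """
    if name in sys.modules:
        return "loaded"
    try:
        # find_spec locates a top-level module without importing it.
        spec = importlib.util.find_spec(name)
    except (ImportError, ValueError):
        spec = None
    return "installed" if spec is not None else "missing"
```

Calling `classify_module("requests")` in a running service would report whether requests has been imported so far, which is the "loaded" state discussed in the sections that follow.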

Let’s look at the production environment of an AI company.

Executed Libraries

Below is an example of a conditional dependency, requests, that is imported only in some scenarios. The usage is different per use case and application, as the code below demonstrates:


[Screenshot of Tensorflow codebase on GitHub]

As one can see, requests is imported only when using the URL parameter, and then an HTTP method is invoked.
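The conditional-import pattern described here can be illustrated with a short sketch. This is not TensorFlow's code: the function `read_bytes` is hypothetical, and the standard-library `urllib.request` stands in for requests so the example runs without third-party packages.

```python
def read_bytes(path=None, url=None):
    """Return raw bytes from a URL if one is given, otherwise from a local path."""
    if url is not None:
        # Conditional dependency: the import only happens on this branch.
        # urllib.request is a stand-in for requests (an assumption of this sketch).
        import urllib.request

        with urllib.request.urlopen(url) as response:
            return response.read()
    if path is None:
        raise ValueError("either path or url is required")
    # Local-file branch: the networking module is never imported by this function.
    with open(path, "rb") as handle:
        return handle.read()
```

An application that only ever passes `path` never triggers the import inside this function, while one that passes `url` both loads the module and executes one of its functions.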

Thanks to the runtime context, it is possible to determine whether the application invokes all or part of the functions that are loaded. We don’t have to guess. Instead, we can leverage runtime information to reduce noise and eliminate unnecessary security tasks, and hypothetical risks that are not exploitable in production.

Let’s look at an image-processing application called image-processor.

We know that an app named image-processor has called (executed) a vulnerable function within the requests library at least once. We are certain that the vulnerable function within requests is triggered in the current production pipeline and, moreover, that the library version is vulnerable to CVE-2023-32681 (CVSS score 6.1).

Therefore, we automatically raise the risk of exploitability from ‘Medium’ to ‘High’.

Loaded Libraries

Meanwhile, in other places in the code, TensorFlow imports requests and loads it to memory as soon as TensorFlow is initialized:


[Screenshot of Tensorflow codebase on GitHub]

Let’s look at a different application, called tickets-api. The tickets API also uses the tf.keras module, which depends on requests. Inside the tickets API, requests is always imported along with the library, yet it is never executed. Thanks to the runtime context, we know that in our production environment requests is loaded at this line but none of its functions are invoked.

Therefore, we automatically lower the risk of possible exploitation for CVE-2023-32681 (CVSS score 6.1) from Medium to Low.

Installed Libraries

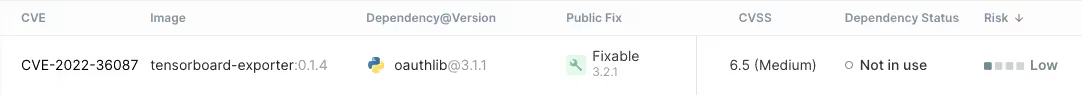

Most libraries in this example are not in use at all. It is important to determine whether a vulnerable function, like the one in requests, is loaded to memory at all and, if so, where and how exactly in our runtime. Let’s look at another conditional dependency of TensorFlow, oauthlib, which is only loaded and invoked if you use tensorboard as part of TensorFlow in your application to track experiments.

The figure above shows that oauthlib is an indirect dependency of tensorflow.

We can also tell that if you don’t use tensorboard in your application, oauthlib will never be loaded to memory, making CVE-2022-36087 (CVSS score 6.5) less urgent.

The runtime context of tensorboard-exporter shows that the vulnerable function within oauthlib is neither loaded nor executed. If we judge it based only on the CVSS score, the risk is Medium, but the real risk is lower than the CVSS score suggests.

Since the vulnerable function is never executed in our environment, in any real-world scenario, we automatically classify the risk as Low instead of Medium.
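The three reclassifications in this walkthrough (executed, loaded, installed) can be summarized in a short sketch. The names `Risk`, `cvss_to_risk`, and `adjust_risk` are hypothetical, and the one-level-up, one-level-down rule is an assumption generalized from the three examples above, not a description of Oligo's actual scoring. The severity bands follow the CVSS v3 qualitative rating scale.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def cvss_to_risk(score):
    """Map a CVSS v3 base score to its qualitative band (the 0.0 'None' band is ignored)."""
    if score >= 9.0:
        return Risk.CRITICAL
    if score >= 7.0:
        return Risk.HIGH
    if score >= 4.0:
        return Risk.MEDIUM
    return Risk.LOW


def adjust_risk(score, state):
    """Shift the CVSS band by one level based on the observed runtime state.

    state: 'executed'  -> the vulnerable function was called at least once
           'loaded'    -> the library is in memory but the function never ran
           'installed' -> the library is on disk but never loaded
    """
    base = cvss_to_risk(score)
    if state == "executed":
        step = 1   # assumption: raise by one level
    elif state in ("loaded", "installed"):
        step = -1  # assumption: lower by one level
    else:
        raise ValueError(f"unknown runtime state: {state!r}")
    # Clamp so the result stays within LOW..CRITICAL.
    return Risk(min(max(base + step, Risk.LOW), Risk.CRITICAL))
```

With these assumptions, `adjust_risk(6.1, "executed")` yields `Risk.HIGH` for image-processor, `adjust_risk(6.1, "loaded")` yields `Risk.LOW` for tickets-api, and `adjust_risk(6.5, "installed")` yields `Risk.LOW` for tensorboard-exporter.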

Loaded Libraries and the Impact of Partial Runtime Application Security Coverage

So we have a lot of dependencies in our code, but we use each one differently.

When products declare that they support runtime application security, it usually means that the product uses operating system (OS) probes to gain the required visibility. These probes are hooks that show when the OS opens or uses a specific file. Probes come in different variations, but one thing to keep in mind is that they always have an impact on runtime performance. Essentially, a probe can be thought of as an extra `if` statement that needs to be executed every time the code runs.

Probes are an essential way to identify a “loaded library”, which means the OS has probably loaded the file into memory. As the example above demonstrates, however, this does not mean the file is used at all or executed at any point during runtime. The only indication it gives users is that the file is ready to run if it ever needs to be executed.
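The limitation of file-open probes can be illustrated with a Python-level analogy. This sketch uses Python's audit hooks (`sys.addaudithook`, Python 3.8+) rather than a real OS probe, and the helper name `install_open_probe` is hypothetical. The hook sees that a file was opened, but it has no way of knowing whether any code in that file ever ran.

```python
import sys


def install_open_probe():
    """Install an audit hook that records every file path opened by this process.

    Returns the live set of recorded paths. Audit hooks cannot be removed
    once installed, so this is meant for illustration only.
    """
    seen = set()

    def probe(event, args):
        # The "extra if statement": this check runs on every audited event.
        if event == "open" and args and isinstance(args[0], str):
            seen.add(args[0])

    sys.addaudithook(probe)
    return seen
```

After `seen = install_open_probe()`, reading a source file with `open(path).read()` adds `path` to `seen` even though none of the functions defined in that file were called, which mirrors the gap between "loaded" and "executed".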

Executed Libraries with Deep Runtime Application Security Analysis

This is where Oligo differs from other solutions. Oligo uses a revolutionary, patent-pending eBPF-based approach that provides kernel-level tracing and visibility, enabling true instrumentation of the runtime. This makes it possible to understand in real time when a function is called or a library is executed. This is the real difference between libraries that are simply “loaded” and files that are actually “executed” during runtime. Based on our telemetry data, a mere 50% of files that are loaded during runtime are executed. This is an extremely important metric when it comes to engineering cognitive load.


[Sample data from one of Oligo's environments. Approximately 50% are loaded, but only about 25% are actually executed.]

Knowing which libraries are actually in use and being executed, down to the call stack, provides an added layer of filtration of CVE noise, with a much more accurate understanding of real security risk to your systems. With real-time visibility, it’s possible to respond much more rapidly to truly exploitable threats as they arise within the exact vulnerable executed function, and to avoid wasting energy on loaded libraries that are not being executed at all.
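The loaded-versus-executed distinction can be demonstrated inside a single Python process. This is only an analogy under stated assumptions: it uses the interpreter's `sys.setprofile` hook, not eBPF or kernel-level tracing, it only sees Python-level function calls in the current thread, and the helper name `record_executed_modules` is hypothetical.

```python
import sys


def record_executed_modules(func, *args, **kwargs):
    """Run func and return (result, names of modules whose Python functions ran)."""
    executed = set()

    def profiler(frame, event, arg):
        # "call" fires whenever a Python function is entered; C functions are skipped.
        if event == "call":
            executed.add(frame.f_globals.get("__name__", "?"))

    previous = sys.getprofile()
    sys.setprofile(profiler)
    try:
        result = func(*args, **kwargs)
    finally:
        # Always restore whatever profiler was active before.
        sys.setprofile(previous)
    return result, executed
```

Comparing the returned set against `sys.modules` separates modules that were merely imported from modules whose functions actually ran during the call.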

Why This Matters to You

Take a common OSS library as an example. It contains hundreds of lines of code, and it has its own set of dependencies that double or triple the amount of code coupled with it. When you run your SCA scanner, it explodes with CVE alerts, many of them critical or high, and you don’t know where to start mitigating these risks.

The vulnerabilities you need to prioritize are those in files that are not just “loaded” to memory during runtime but are actually invoked, with their functions executed, especially when those executed functions have access to other sensitive data or systems. In this way, you can filter a significant amount of CVE noise from engineers’ backlogs.

Gain clarity and prioritize your security efforts by understanding which dependencies are actively invoked and pose a risk. By focusing on addressing real threats, you can ensure more effective security protection and rebuild developer trust, all while streamlining your engineering efforts.
